Introduction

A key usability challenge for touchless gesture systems is inferring whether a user intends to interact. Mid-air gesture sensing is ‘always on’ and will often detect movement from people who have no intention of using the touchless user interface. Incorrectly treating this movement as input leads to false-positive gesture recognition, which can trigger disruptive actions on behalf of people who never meant to provide input.

Rhythmic gestures are touchless gestures that users repeat in time with a rhythm, as a way of showing their intent to interact. This helps to reduce false-positive gesture recognition, because people are unlikely to repeat the exact gesture in time with the input rhythm unless they mean to. This is a novel form of touchless input based on spatial and temporal coincidence, rather than on spatial information alone.
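To make the idea of spatial and temporal coincidence concrete, here is a minimal Python sketch. It is not the recognition method used in the studies cited here; it assumes a hypothetical spatial recogniser that emits one labelled event per gesture repetition, beats at fixed multiples of a known period, and illustrative values for the timing tolerance and the number of repetitions required. The names `GestureEvent` and `is_rhythmic_input` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GestureEvent:
    """One repetition reported by a (hypothetical) spatial gesture recogniser."""
    timestamp: float  # seconds since the rhythm started
    gesture: str      # spatial label, e.g. "left-right" (illustrative)


def is_rhythmic_input(events: Sequence[GestureEvent],
                      target_gesture: str,
                      beat_period: float,
                      tolerance: float = 0.15,
                      required_repeats: int = 4) -> bool:
    """Return True if the most recent repetitions show both spatial and
    temporal coincidence with the input rhythm.

    Assumes `events` is sorted by timestamp and that beats occur at
    t = k * beat_period. Tolerance and repeat count are illustrative values.
    """
    if len(events) < required_repeats:
        return False  # too few repetitions to infer intent
    recent = events[-required_repeats:]

    for event in recent:
        # Spatial coincidence: each repetition must be the expected gesture.
        if event.gesture != target_gesture:
            return False
        # Temporal coincidence: the repetition must land close to a beat.
        offset = event.timestamp % beat_period
        if min(offset, beat_period - offset) > tolerance:
            return False

    # Require one repetition per beat, with no skipped or doubled beats.
    beats = [round(e.timestamp / beat_period) for e in recent]
    return all(later - earlier == 1 for earlier, later in zip(beats, beats[1:]))


if __name__ == "__main__":
    period = 0.6  # illustrative tempo: one beat every 0.6 s (100 bpm)
    on_beat = [GestureEvent(period * k + 0.03, "left-right") for k in range(1, 5)]
    incidental = [GestureEvent(t, "left-right") for t in (0.9, 1.25, 2.1, 2.4)]
    print(is_rhythmic_input(on_beat, "left-right", period))     # True
    print(is_rhythmic_input(incidental, "left-right", period))  # False
```

In this sketch, a person who happens to make the right spatial movement once or twice is rejected because the movement does not keep landing on consecutive beats. Lowering `tolerance` or raising `required_repeats` trades responsiveness for fewer false positives.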

I first investigated rhythmic gestures during my PhD, as a way of letting users direct their input towards a particular device. These were evaluated in user studies described in a CHI 2016 paper [1], which investigated different spatial patterns (e.g., linear vs circular trajectories) and rhythm tempos. The results were promising. Around the same time, work on motion correlation (inspired by gaze-based orbit interactions) started to appear, showing the potential of temporal coincidence for touchless gesture input.

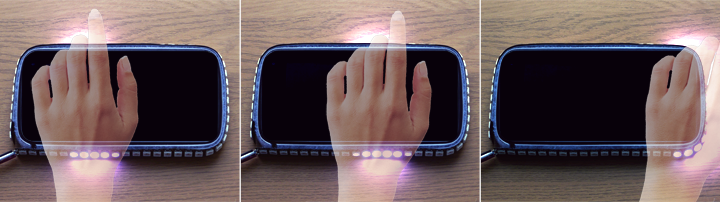

In later work presented at ICMI 2017 [2], we considered rhythmic micro-gestures, applying the same interaction principles to small movements of the hand and wrist rather than the larger hand and arm movements used in the CHI 2016 paper. The goal was to evaluate subtler hand movements that still rely on spatio-temporal coincidence, enabling more discreet and socially acceptable input.

Research highlights:

- Rhythmic gestures for addressing in-air gesture systems [1]

- Rhythmic micro-gestures for discreet touchless input [2]

References

[1] Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems – CHI ’16, 2319-2331. 2016.

@inproceedings{CHI2016,
  author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},
  booktitle = {{Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems - CHI '16}},
  title = {{Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems}},
  year = {2016},
  publisher = {ACM},
  pages = {2319--2331},
  doi = {10.1145/2858036.2858308},
  pdf = {http://research.euanfreeman.co.uk/papers/CHI_2016.pdf},
  url = {http://euanfreeman.co.uk/gestures/},
  video = {{https://www.youtube.com/watch?v=6_hGbI_SdQ4}},
}

[2] Rhythmic Micro-Gestures: Discreet Interaction On-the-Go

E. Freeman, G. Griffiths, and S. Brewster.

In Proceedings of the 19th ACM International Conference on Multimodal Interaction – ICMI ’17, 115-119. 2017.

@inproceedings{ICMI2017,
  author = {Freeman, Euan and Griffiths, Gareth and Brewster, Stephen},
  booktitle = {{Proceedings of the 19th ACM International Conference on Multimodal Interaction - ICMI '17}},
  title = {{Rhythmic Micro-Gestures: Discreet Interaction On-the-Go}},
  year = {2017},
  publisher = {ACM},
  pages = {115--119},
  doi = {10.1145/3136755.3136815},
  url = {},
  pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2017.pdf},
}