Introduction

An important interaction challenge with touchless gesture input is the Midas Touch problem (or false-positive recognition), which occurs when an interactive system mistakenly classifies detected movements as intentional input. For example, suppose a person waves at someone else in the room: to a touchless gesture sensor, that waving hand movement might look the same as an input gesture, even though it was aimed at another person. This could lead to accidental input with unintended effects in the system.

One solution to this problem is to employ a clutching mechanism that gives users a way of indicating their intention to interact. In this post I give a brief overview of some common clutching techniques, as evaluated in our CHI 2022 paper on clutching interactions for touchless medical imaging systems [1]. I also discuss a related interaction problem, addressing in-air gesture systems, drawing on work from my PhD thesis and CHI 2016 paper [2].

Clutching Techniques

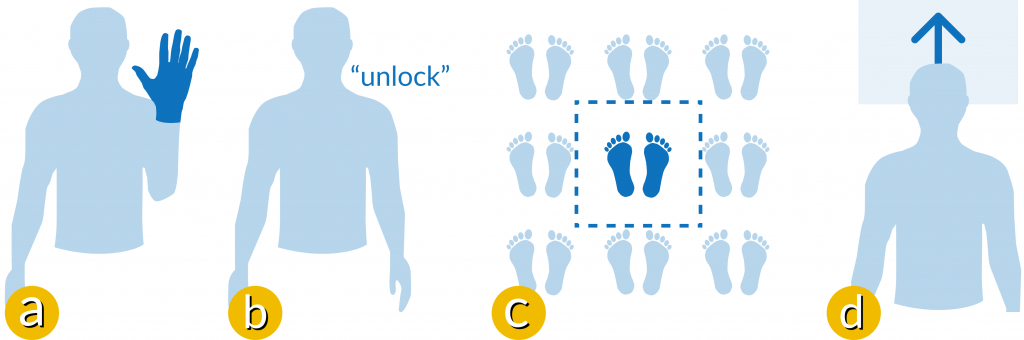

In our CHI 2022 paper [1], we evaluated four clutching interaction techniques:

- Unlock gesture: unlocks the system after a posture is held for a brief period;

- Unlock phrase: unlocks the system after a spoken request to “unlock”;

- Active zone: unlocks the system when the user stands in a designated area, where intent to interact is assumed;

- Gaze direction: unlocks the system when the user is actively looking towards the display.

This set of clutching techniques includes two explicit mechanisms (unlock gesture and unlock phrase), where the user performs a discrete action to unlock the system, and two implicit mechanisms (active zone and gaze direction), where the system is unlocked automatically when certain constraints are met.

Unlock Gesture

Gestures can be used as mode-switches, giving users a means to (un)lock further sensing by performing a particular action or posture. For this to be effective, an unlock gesture needs to be unlikely to occur in other situations (to avoid false-positive recognition). To further improve robustness, additional constraints can be introduced, e.g., holding a pose for a threshold period of time. In our study, our (un)lock mode-switch gesture was a raised hand, held steady above shoulder height for a brief period.
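The hold-to-unlock behaviour described above can be sketched as a small dwell-timer state machine. This is a minimal illustration, not the study's implementation: the `Frame` format (per-frame hand and shoulder positions from a skeleton tracker), the 1-second hold time and the 5 cm steadiness tolerance are all hypothetical values chosen for the example, since the study only specifies "a brief period".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    """One skeleton-tracking sample (hypothetical format).

    t is in seconds; positions are in metres with y pointing up.
    """
    t: float
    hand_x: float
    hand_y: float
    shoulder_y: float


class RaisedHandClutch:
    """Toggles between locked and unlocked when a raised hand is held steady.

    hold_s and max_drift_m are illustrative defaults, not the study's values.
    """

    def __init__(self, hold_s: float = 1.0, max_drift_m: float = 0.05) -> None:
        self.hold_s = hold_s
        self.max_drift_m = max_drift_m
        self.unlocked = False
        self._hold_start: Optional[Frame] = None  # first frame of the current hold
        self._fired = False  # True once the current hold has toggled the state

    def update(self, f: Frame) -> bool:
        """Feed one frame; return whether gesture input is currently unlocked."""
        raised = f.hand_y > f.shoulder_y
        start = self._hold_start
        steady = (
            start is not None
            and abs(f.hand_x - start.hand_x) <= self.max_drift_m
            and abs(f.hand_y - start.hand_y) <= self.max_drift_m
        )
        if not raised:
            # Hand is at or below shoulder height: abandon any hold in progress.
            self._hold_start = None
            self._fired = False
        elif not steady:
            # First raised frame, or the hand drifted too far: restart the hold.
            self._hold_start = f
            self._fired = False
        elif not self._fired and f.t - start.t >= self.hold_s:
            # Held long enough: toggle exactly once per hold.
            self.unlocked = not self.unlocked
            self._fired = True
        return self.unlocked
```

Feeding frames sampled every 0.25 s with the hand held steady above the shoulder leaves the system locked for the first four frames and unlocks it at t = 1.0 s; keeping the hand up does not toggle it back, because each hold fires only once. Both the hold time and the steadiness check are there to make the unlock posture unlikely to occur by accident.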

Unlock Phrase

Speech can be used as an alternative input modality for clutching. Because the clutch uses a different modality from the gesture input, it provides an additional level of robustness against false-positive gesture recognition. The earliest commonly cited example of this approach is the Put-That-There graphics authoring system from 1980, in which pointing gestures were combined with spoken commands; by using two input modalities, the system was able to determine when input gestures were intentional. In our study, we used the words “unlock” and “lock” as straightforward mode-switches.
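A spoken “unlock”/“lock” mode-switch can be sketched as a gate that sits between the gesture recogniser and the application. This sketch assumes a hypothetical speech recogniser that delivers plain-text transcripts; `SpeechClutch` and `filter_gestures` are illustrative names, not part of any real toolkit or of the study's software.

```python
import string
from typing import Iterable, List, Tuple


class SpeechClutch:
    """Gates gesture events using the spoken commands "unlock" and "lock".

    Assumes a hypothetical speech recogniser that delivers text transcripts;
    this is an illustrative sketch, not the study's implementation.
    """

    def __init__(self) -> None:
        self.unlocked = False

    def on_speech(self, transcript: str) -> None:
        # Match whole words only, so "clock" or "unlocked" do not count.
        words = [w.strip(string.punctuation) for w in transcript.lower().split()]
        # The most recent command wins if both words appear in one transcript.
        for word in reversed(words):
            if word == "unlock":
                self.unlocked = True
                return
            if word == "lock":
                self.unlocked = False
                return

    def accepts_gesture(self) -> bool:
        """Return True if gesture input should reach the application."""
        return self.unlocked


def filter_gestures(events: Iterable[Tuple[str, str]]) -> List[str]:
    """Replay (kind, value) events and return the gestures that get through."""
    clutch = SpeechClutch()
    accepted: List[str] = []
    for kind, value in events:
        if kind == "speech":
            clutch.on_speech(value)
        elif kind == "gesture" and clutch.accepts_gesture():
            accepted.append(value)
    return accepted
```

For a sequence where a swipe occurs before “Unlock.”, another swipe occurs after it, and a zoom follows “please lock”, only the middle swipe is passed on. Whole-word matching is a deliberate choice here: substring matching would let words such as “clock” toggle the system, reintroducing the false positives the clutch is meant to prevent.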

Active Zone

The active zone is an implicit clutching mechanism that assumes the user intends to interact if their body is situated in a designated area (the “active zone”). The earliest commonly cited example of this approach is the Charade gesture-controlled presentation system from 1993. Charade used a glove-based sensing device and only responded to user input when the glove was directly in front of the presentation screen, i.e., when the hand was in the active zone.

With more sophisticated tracking (e.g., sensing a person's position in the room), active zones can be defined based on where a person is standing. We took this approach in our study, defining a 1 m² active zone, which was marked on the floor.
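A floor-based active zone reduces to a point-in-rectangle test on the tracked body position. This is a minimal sketch under stated assumptions: the zone is treated as a 1 m × 1 m square (the study gives its area), the floor coordinate frame (x across the room, z away from the sensor) is hypothetical, and `square_zone` and `input_enabled` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActiveZone:
    """Axis-aligned rectangle on the floor plane, in metres.

    The coordinate frame (x across the room, z away from the sensor) is a
    hypothetical choice for illustration.
    """
    x_min: float
    z_min: float
    x_max: float
    z_max: float

    def contains(self, x: float, z: float) -> bool:
        return self.x_min <= x <= self.x_max and self.z_min <= z <= self.z_max


def square_zone(centre_x: float, centre_z: float, side_m: float = 1.0) -> ActiveZone:
    """Build a square zone (1 m x 1 m by default) centred on a floor point."""
    half = side_m / 2
    return ActiveZone(centre_x - half, centre_z - half, centre_x + half, centre_z + half)


def input_enabled(zone: ActiveZone, body_x: float, body_z: float) -> bool:
    """Implicit clutch: accept input only while the tracked body is inside the zone."""
    return zone.contains(body_x, body_z)
```

With a zone centred 2 m in front of the sensor, a person standing at (0.1, 2.0) can interact, while someone who steps sideways to (0.8, 2.0) cannot. A deployed system might also add a small margin or a short dwell time so that the state does not flicker when someone stands on the boundary.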

Gaze Direction

Attentional cues like eye contact can be used to implicitly infer a user’s intention to interact. This rests on a simple assumption: if the user is looking towards an interactive display, they may be willing to interact with it or, at the very least, they will notice when sensing is active and take care not to trigger the system accidentally. Schwarz et al. investigated a more sophisticated set of attentional cues and also included postural cues in their “intent to interact” score. In our study, we used head direction as a simple proxy for gaze.

References

[1] Investigating Clutching Interactions for Touchless Medical Imaging Systems

S. Cronin, E. Freeman, and G. Doherty.

In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (CHI '22). ACM, 2022. 14 pages. DOI: 10.1145/3491102.3517512

PDF: http://research.euanfreeman.co.uk/papers/CHI_2022_Clutching.pdf

Video: https://www.youtube.com/watch?v=MFznaMUG_DU

[2] Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems (CHI '16), 2319–2331. ACM, 2016. DOI: 10.1145/2858036.2858308

PDF: http://research.euanfreeman.co.uk/papers/CHI_2016.pdf

Project page: http://euanfreeman.co.uk/gestures/

Video: https://www.youtube.com/watch?v=6_hGbI_SdQ4