Introduction

Users must address a system in order to interact with it: this means discovering where and how to direct input towards the system. Sometimes this is a simple problem. For example: when using a touchscreen for input, users know to reach out and touch (how) the screen (where); when using a keyboard for input, they know to press (how) the keys (where). However, addressing a system can sometimes be complicated. When using mid-air gestures, it is not always clear where users should perform gestures, or how they should direct those gestures towards the system. My PhD thesis [1] examined the problem of addressing in-air gesture systems and investigated interaction techniques that help users do this.

Finding where to perform gestures

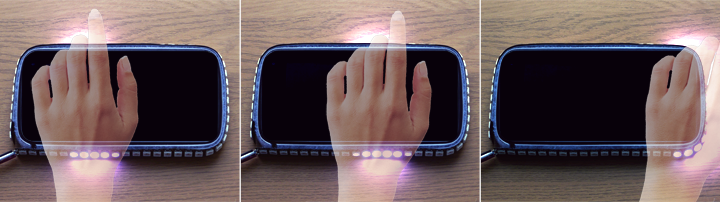

Users need to know where to perform gestures so that the input device can sense their movements. If their movements cannot be sensed, their actions will have no effect on the system. Some gesture systems help users find where to gesture by showing them what the input device can see. For example, the image above shows feedback that tells the user what the input device can “see”. If users start gesturing outside the sensor range, this feedback tells them to move so that the input device can see them properly.

Not all systems can give this type of feedback. For example, a system with no screen, or with only a small display, may not be able to show such detailed feedback. My thesis investigated an interaction technique, sensor strength, that uses light, sound and vibration to help users find where to gesture, so that systems do not need to show feedback on a screen. Sensor strength tells users how close their hand is to a “sweet spot” in the sensor space: a location where the hand can be sensed reliably. If users are close to the sweet spot, their gestures are more likely to be sensed correctly; if they are far from it, their gestures may not be detected at all. My thesis [1] and my CHI 2016 paper [2] describe an evaluation of this technique, which showed that users could find the sweet spot with an accuracy of 51-80 mm. In later work, we investigated how similar principles could be applied to an ultrasound haptic interface [3].
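The mapping from hand position to feedback could be sketched as follows. This is a minimal illustration, not the implementation evaluated in the thesis: it assumes the sensor reports hand position in millimetres, that strength falls off linearly with distance from the sweet spot, and it uses hypothetical values for the range (150 mm) and the tone pitches. A single strength value drives all three output channels, so the same cue can be given by whichever of light, sound or vibration a device supports.

```python
import math
from dataclasses import dataclass

# Hypothetical parameters, chosen for illustration only.
MAX_DISTANCE_MM = 150.0  # beyond this distance from the sweet spot, strength is zero
MIN_TONE_HZ = 220.0      # pitch played at zero strength
MAX_TONE_HZ = 880.0      # pitch played at the sweet spot


@dataclass
class Feedback:
    light_brightness: float     # 0.0 (off) to 1.0 (full brightness)
    tone_hz: float              # pitch of an audio cue; higher means closer
    vibration_amplitude: float  # 0.0 (none) to 1.0 (strongest)


def sensor_strength(hand_mm, sweet_spot_mm, max_distance_mm=MAX_DISTANCE_MM):
    """Return 1.0 at the sweet spot, falling linearly to 0.0 at max_distance_mm."""
    distance = math.dist(hand_mm, sweet_spot_mm)
    return max(0.0, 1.0 - distance / max_distance_mm)


def feedback_for(strength):
    """Map one strength value onto light, sound and vibration outputs."""
    return Feedback(
        light_brightness=strength,
        tone_hz=MIN_TONE_HZ + strength * (MAX_TONE_HZ - MIN_TONE_HZ),
        vibration_amplitude=strength,
    )


if __name__ == "__main__":
    sweet_spot = (0.0, 0.0, 200.0)  # hypothetical position above the sensor, in mm
    hand = (0.0, 0.0, 275.0)        # hand 75 mm above the sweet spot
    print(feedback_for(sensor_strength(hand, sweet_spot)))
    # prints Feedback(light_brightness=0.5, tone_hz=550.0, vibration_amplitude=0.5)
```

A linear falloff is the simplest choice; a real system might instead quantise strength into a few discrete levels so that changes in light or vibration are easier to perceive.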

Discovering how to direct input

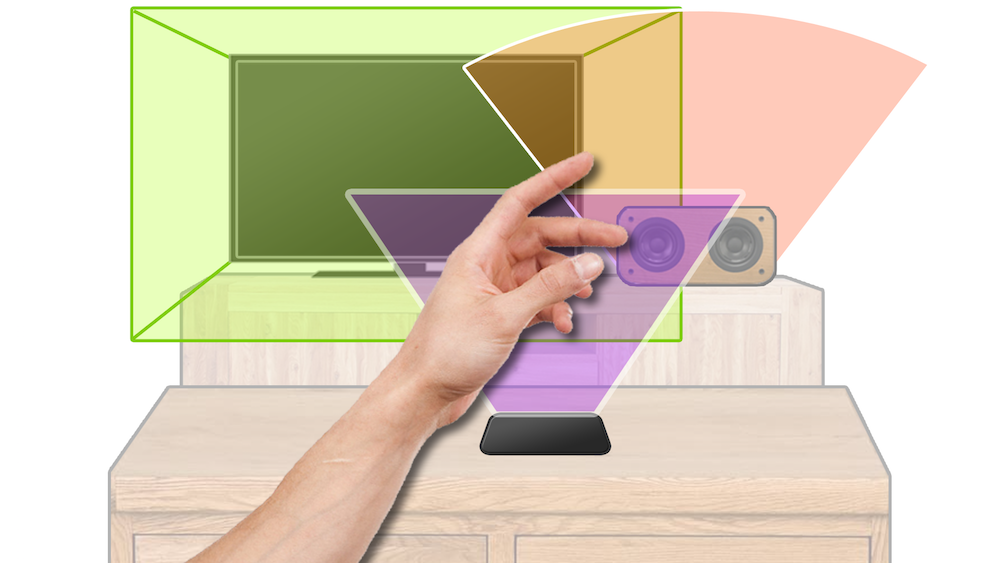

Users have to discover how to direct their gestures towards the system they want to interact with. This might not be necessary if there is only one gesture-sensing device in the room. In the future, however, there may be many devices detecting gestures at the same time; the image above illustrates this type of situation. Mobile phones, televisions, games consoles and laptops can already sense gestures, so this problem may arrive sooner than expected.

If many devices sense gestures at the same time, users may unintentionally affect one or more systems. This is known as the Midas Touch problem. Other researchers have developed clutching techniques to overcome it, although these are not always practical when more than one gesture system is present. My thesis investigated an alternative interaction technique, rhythmic gestures, which allows users to direct their input towards the one system they wish to interact with, using spatial and temporal coincidence (also known as motion correlation). Many systems can use this technique at once with little interference.

Rhythmic gestures

Rhythmic gestures are simple hand movements that are repeated in time with an animation shown to the user on a visual display. Each gesture consists of a movement and an interval: for example, a user may repeatedly move their hand from side to side every 500 ms, or up and down every 750 ms. The image below shows how an interactive light display could be used to show users a side-to-side gesture movement; the animation could also be shown on a screen if necessary. Users perform a rhythmic gesture by following the animation with their hand, in time.
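A rhythmic gesture, a movement plus an interval, could be modelled as below. This is a hypothetical sketch: the `RhythmicGesture` type, the normalised path from -1 to 1 and the constant-speed (triangle-wave) motion are assumptions for illustration, not details taken from the thesis. The `target_position` method gives where the animated target should be at any moment, which a light display or screen could use to drive the animation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RhythmicGesture:
    """A movement plus an interval (hypothetical model for illustration)."""
    axis: str         # "horizontal" (side to side) or "vertical" (up and down)
    interval_ms: int  # time for the target to travel from one end of its path to the other

    def target_position(self, t_ms: float) -> float:
        """Position of the animated target along its axis, from -1.0 to 1.0.

        The target moves at constant speed (a triangle wave): it starts at -1.0,
        reaches 1.0 after one interval and returns to -1.0 after two.
        """
        phase = (t_ms % (2 * self.interval_ms)) / self.interval_ms  # 0.0 up to 2.0
        if phase <= 1.0:
            return -1.0 + 2.0 * phase
        return 3.0 - 2.0 * phase


if __name__ == "__main__":
    side_to_side = RhythmicGesture("horizontal", 500)
    up_and_down = RhythmicGesture("vertical", 750)
    # Sample each animation at five evenly spaced points over one full cycle.
    print([side_to_side.target_position(t) for t in range(0, 1001, 250)])
    # prints [-1.0, 0.0, 1.0, 0.0, -1.0]
    print([up_and_down.target_position(t) for t in range(0, 1501, 375)])
    # prints [-1.0, 0.0, 1.0, 0.0, -1.0]
```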

This interaction technique can be used to direct input. If every gesture-sensing system looks for a different rhythmic gesture, users can perform a system's gesture to show that they want to interact with it. This overcomes the Midas Touch problem, because the other systems can tell that the input is not intended for them. My thesis [1] and CHI 2016 paper [2] describe evaluations of this interaction technique. I found that users could successfully perform rhythmic gestures, even without feedback about their hand movements.
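Selecting a system by rhythm could be sketched with motion correlation: each system compares the user's recent hand movement with its own animation and responds only if the two are strongly correlated. This minimal sketch is an assumption, not the method evaluated in [1] and [2]. It assumes hand positions are normalised to the range -1 to 1 along a single movement axis, that each hypothetical system (`lamp`, `speaker`) is identified only by its interval, and that a Pearson correlation of at least 0.8 over the window counts as a match.

```python
import math


def target_position(t_ms, interval_ms):
    """Animated target position in [-1, 1]; it crosses its path once per interval."""
    phase = (t_ms % (2 * interval_ms)) / interval_ms
    return -1.0 + 2.0 * phase if phase <= 1.0 else 3.0 - 2.0 * phase


def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either series does not vary."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)


def addressed_system(timestamps_ms, hand_positions, systems, threshold=0.8):
    """Return the system whose animation best matches the hand, or None.

    systems maps a (hypothetical) system name to its rhythm interval in ms.
    A system only counts as addressed if its correlation reaches threshold.
    """
    best_name, best_r = None, threshold
    for name, interval_ms in systems.items():
        target = [target_position(t, interval_ms) for t in timestamps_ms]
        r = pearson(hand_positions, target)
        if r >= best_r:
            best_name, best_r = name, r
    return best_name


if __name__ == "__main__":
    systems = {"lamp": 500, "speaker": 750}
    window = list(range(0, 3000, 50))  # 3 s of hand tracking, sampled every 50 ms
    # A hand that follows the lamp's side-to-side animation exactly.
    hand = [target_position(t, 500) for t in window]
    print(addressed_system(window, hand, systems))  # prints lamp
```

Because rhythms with different intervals drift in and out of phase, a hand following one animation tends to correlate poorly with the others over a window of a few seconds, which is one way several systems could listen at once with little interference.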

Rhythmic micro-gestures

I extended the rhythmic gesture concept to micro-gestures: very small movements of the hand, such as tapping the thumb against the side of the hand, or opening and closing all fingers at once. These could be especially useful for interacting discreetly with mobile devices in public or on the go. My ICMI 2017 paper [4] describes a user study of their performance, which found that users could perform them successfully with only audio feedback to convey the rhythm.
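Because micro-gestures such as thumb taps are discrete events, one simple way to check their rhythm is to compare the time between successive taps with the target interval. The sketch below is hypothetical and is not the recogniser used in [4]: it assumes that some sensor already reports tap timestamps in milliseconds, and the ±20% tolerance and four-tap minimum are illustrative values.

```python
def matches_rhythm(tap_times_ms, interval_ms, tolerance=0.2, min_taps=4):
    """True if consecutive taps are spaced within tolerance of interval_ms.

    tolerance is a fraction of the interval (0.2 allows +/-20%). Both defaults
    are hypothetical choices for illustration.
    """
    if len(tap_times_ms) < min_taps:
        return False
    gaps = [later - earlier for earlier, later in zip(tap_times_ms, tap_times_ms[1:])]
    return all(abs(gap - interval_ms) <= tolerance * interval_ms for gap in gaps)


def recognise(tap_times_ms, rhythms):
    """Return the name of the first rhythm the taps match, or None."""
    for name, interval_ms in rhythms.items():
        if matches_rhythm(tap_times_ms, interval_ms):
            return name
    return None


if __name__ == "__main__":
    # Thumb taps roughly every 500 ms, e.g. timed against an audio click track.
    taps = [0, 510, 990, 1505]
    print(recognise(taps, {"fast taps": 500, "slow taps": 750}))  # prints fast taps
```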

Videos

A video demonstration of the interaction techniques described here:

The 30-second preview video for the CHI 2016 paper:

Summary

To address an in-air gesture system, users need to know where to perform gestures and how to direct their input towards the system. My research investigated two techniques (sensor strength and rhythmic gestures) that help users solve these problems. I evaluated each technique individually and found both to be effective. I also combined them into a single technique that shows users how to direct their input while also helping them find where to gesture. An evaluation of the combined technique found that performance remained good, and users rated the interaction as easy to use. Together, these techniques could help users address in-air gesture systems.

References

[1] Interaction techniques with novel multimodal feedback for addressing gesture-sensing systems

E. Freeman.

PhD Thesis, University of Glasgow. 2016.

@phdthesis{Thesis,
  author = {Freeman, Euan},
  title = {{Interaction techniques with novel multimodal feedback for addressing gesture-sensing systems}},
  school = {University of Glasgow},
  year = {2016},
  month = 3,
  url = {http://theses.gla.ac.uk/7140/},
  pdf = {http://theses.gla.ac.uk/7140/},
}

[2] Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems – CHI ’16, 2319-2331. 2016.

@inproceedings{CHI2016,
  author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},
  booktitle = {{Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems - CHI '16}},
  title = {{Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems}},
  year = {2016},
  publisher = {ACM},
  pages = {2319--2331},
  doi = {10.1145/2858036.2858308},
  pdf = {http://research.euanfreeman.co.uk/papers/CHI_2016.pdf},
  url = {http://euanfreeman.co.uk/gestures/},
  video = {{https://www.youtube.com/watch?v=6_hGbI_SdQ4}},
}

[3] HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback

E. Freeman, D. Vo, and S. Brewster.

In Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium, TP2A.09. 2019.

@inproceedings{WHC2019,
  author = {Freeman, Euan and Vo, Dong-Bach and Brewster, Stephen},
  booktitle = {{Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium}},
  title = {{HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback}},
  year = {2019},
  publisher = {IEEE},
  pages = {TP2A.09},
  doi = {10.1109/WHC.2019.8816092},
  url = {http://euanfreeman.co.uk/haptiglow/},
  pdf = {http://research.euanfreeman.co.uk/papers/WHC_2019.pdf},
  data = {https://zenodo.org/record/2631398},
  video = {https://www.youtube.com/watch?v=hXCH-xBgnig},
}

[4] Rhythmic Micro-Gestures: Discreet Interaction On-the-Go

E. Freeman, G. Griffiths, and S. Brewster.

In Proceedings of 19th ACM International Conference on Multimodal Interaction – ICMI ’17, 115-119. 2017.

@inproceedings{ICMI2017,
  author = {Freeman, Euan and Griffiths, Gareth and Brewster, Stephen},
  booktitle = {{Proceedings of 19th ACM International Conference on Multimodal Interaction - ICMI '17}},
  title = {{Rhythmic Micro-Gestures: Discreet Interaction On-the-Go}},
  year = {2017},
  publisher = {ACM},
  pages = {115--119},
  doi = {10.1145/3136755.3136815},
  pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2017.pdf},
}