Hand Position is Important

Hand position is important for mid-air interaction because it affects the quality of input sensing. If hands are too close to, or too far from, the sensor, then it may have difficulty tracking them accurately and gestures may not be recognised. The space where gestures can be sensed is invisible and often ambiguous, especially when different sensing technologies are used, so we can’t assume users know where to place their hand.

Hand position is also important for mid-air haptic feedback because it affects the quality of the haptics. For example, ultrasound haptics needs sufficient distance for the ultrasound waves to focus, but the feedback weakens if the hand is too far from the device. Different haptic technologies operate over very different ranges of distance, so the hand position that gives the strongest feedback varies between them.

I’ve written about the importance of hand position before (see: Addressing Mid-Air Gesture Systems), but on this page I describe my latest work on this aspect of mid-air user interface design. This work has been accepted as a full paper at the IEEE World Haptics Conference 2019.

HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback

E. Freeman, D. Vo, and S. Brewster.

In Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium, TP2A.09. 2019.

@inproceedings{WHC2019,
  author = {Freeman, Euan and Vo, Dong-Bach and Brewster, Stephen},
  booktitle = {{Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium}},
  title = {{HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback}},
  year = {2019},
  publisher = {IEEE},
  pages = {TP2A.09},
  doi = {10.1109/WHC.2019.8816092},
  url = {http://euanfreeman.co.uk/haptiglow/},
  pdf = {http://research.euanfreeman.co.uk/papers/WHC_2019.pdf},
  data = {https://zenodo.org/record/2631398},
  video = {https://www.youtube.com/watch?v=hXCH-xBgnig},
}

Guiding Users to Better Hand Positions

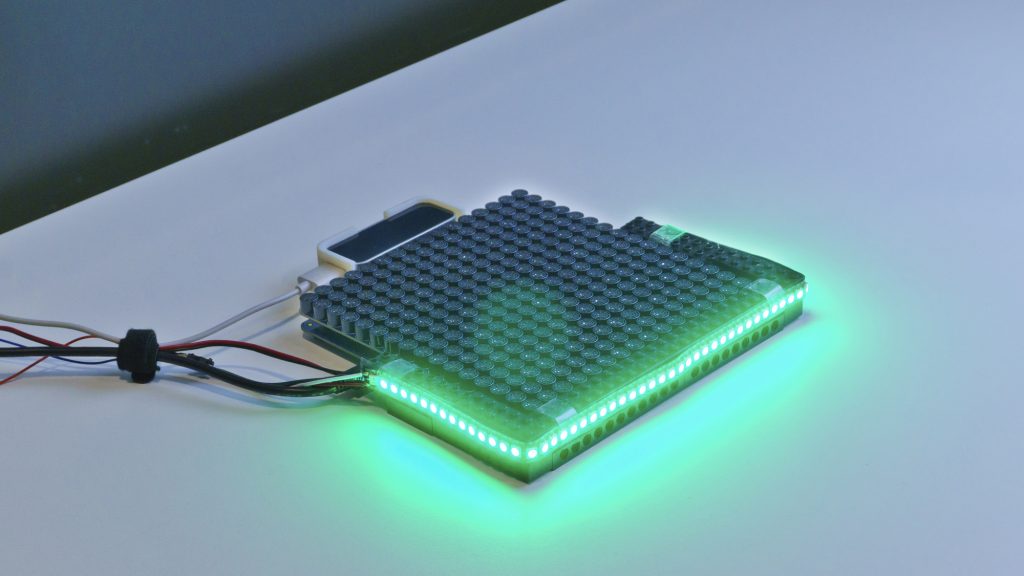

We created a system called HaptiGlow that helps users find where to position their hands for optimal mid-air interaction. HaptiGlow uses lights placed around the edge of an ultrasound haptics device (from Ultrahaptics) for peripheral visual feedback. This visual feedback is combined with ultrasound haptic feedback to provide multimodal cues that guide hand movements.

Both feedback modalities give information about hand position, helping users find the location where sensing is more robust and haptic feedback feels stronger. This helps users address the system when they initiate interaction. Addressing the system is a necessary first step that precedes task-oriented gestures (e.g., manipulating control widgets or issuing a command), and doing it well will improve usability, input quality, and haptic perception.

HaptiGlow

The HaptiGlow system consists of an Ultrahaptics UHEV1 device, partially surrounded by Adafruit NeoPixel LEDs. The LEDs are controlled by an Arduino Uno, and a Leap Motion sensor tracks hand position over the haptics device.

A core part of interaction with HaptiGlow is the concept of a sweet-spot: a position that is optimal for mid-air input and output. In this case, the sweet-spot is approximately 12-15 cm above the Ultrahaptics device. At this height, the user’s hands can be robustly tracked by the input sensor, and the ultrasound haptic feedback feels strongest.
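As a minimal illustration of the sweet-spot idea, the Python sketch below classifies a tracked hand height against the approximate 12-15 cm band. It only considers height; the function name, the three labels, and the use of hard band edges are assumptions made for illustration, not HaptiGlow's implementation.

```python
# A minimal sketch of a sweet-spot height check. The 12-15 cm band comes from
# the text; the function name and the labels are hypothetical.

SWEET_SPOT_MIN_CM = 12.0  # lower edge of the sweet-spot band
SWEET_SPOT_MAX_CM = 15.0  # upper edge of the sweet-spot band


def classify_height(height_cm: float) -> str:
    """Classify a hand height (cm above the array) relative to the sweet-spot band."""
    if height_cm < SWEET_SPOT_MIN_CM:
        return "too low"
    if height_cm > SWEET_SPOT_MAX_CM:
        return "too high"
    return "sweet-spot"


if __name__ == "__main__":
    for h in (8.0, 13.5, 20.0):
        print(f"{h:4.1f} cm -> {classify_height(h)}")
```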

Our visual feedback was designed to help users find the sweet-spot as they move their hands over the device. The colour of the LEDs changes from white to green as the hand moves towards the sweet-spot. This is implemented as a simple interpolation between white and green, based on the 3D distance between hand position and sweet-spot.
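The white-to-green interpolation can be sketched in a few lines of Python. This is a hedged sketch rather than HaptiGlow's code: the sweet-spot coordinates, the `MAX_DISTANCE_CM` cut-off at which the LEDs become fully white, and the function names are hypothetical, and positions are assumed to be in centimetres in a frame where z is height above the array. Sending the resulting colour to the NeoPixels via the Arduino is not shown.

```python
import math

WHITE = (255, 255, 255)
GREEN = (0, 255, 0)
MAX_DISTANCE_CM = 10.0  # hypothetical: distance at or beyond which the LEDs are fully white


def lerp_colour(a, b, t):
    """Linearly interpolate between RGB colours a and b, with t in [0, 1]."""
    return tuple(round(ca + (cb - ca) * t) for ca, cb in zip(a, b))


def led_colour(hand, sweet_spot, max_distance=MAX_DISTANCE_CM):
    """Map the 3D hand-to-sweet-spot distance to a colour between white and green."""
    distance = math.dist(hand, sweet_spot)
    # t = 1 at the sweet-spot (green), falling linearly to 0 at max_distance (white)
    t = max(0.0, 1.0 - distance / max_distance)
    return lerp_colour(WHITE, GREEN, t)


if __name__ == "__main__":
    # Hypothetical sweet-spot: centred over the array, mid-way through the 12-15 cm band.
    sweet_spot = (0.0, 0.0, 13.5)
    # Two hands 4 cm from the sweet-spot in different directions.
    print(led_colour((4.0, 0.0, 13.5), sweet_spot))  # beside the sweet-spot
    print(led_colour((0.0, 0.0, 17.5), sweet_spot))  # above the sweet-spot
```

Because only the distance feeds into the colour, the two example hands print the same colour even though one is beside the sweet-spot and the other is above it. The colour tells users how far they are from the sweet-spot, but not in which direction.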

Our haptic feedback is designed so that users feel stronger feedback as their hand approaches the sweet-spot. We created a haptic ‘cone’, which is centred at the sweet-spot. The circular cross-section is rendered against the palm of the user’s hand, so that the cone is always oriented towards them. As they approach the sweet-spot, the cross-section of the cone increases. The greater area of stimulation creates the perception of stronger feedback.
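One way to picture the haptic cone is as a radius that shrinks with distance from the sweet-spot, traced as a circle in the plane of the palm. The sketch below assumes the widest cross-section sits at the sweet-spot and narrows linearly to a point at a hypothetical `APEX_DISTANCE_CM`, and it uses the 3D hand-to-sweet-spot distance. `MAX_RADIUS_CM`, the number of points, and all function names are illustrative choices, not values from the paper. Driving the Ultrahaptics hardware with these points depends on the vendor SDK and is left out.

```python
import math

MAX_RADIUS_CM = 3.0      # hypothetical: cross-section radius at the sweet-spot
APEX_DISTANCE_CM = 10.0  # hypothetical: distance at which the cone narrows to a point


def cone_radius(distance_cm, max_radius=MAX_RADIUS_CM, apex_distance=APEX_DISTANCE_CM):
    """Radius of the cone's cross-section at a given distance from the sweet-spot."""
    return max_radius * max(0.0, 1.0 - distance_cm / apex_distance)


def _normalise(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def circle_on_palm(palm_centre, palm_normal, radius, n_points=16):
    """Points on a circle of the given radius, lying in the plane of the palm."""
    n = _normalise(palm_normal)
    # Pick any helper vector that is not (nearly) parallel to the palm normal.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _normalise(_cross(n, helper))  # first in-plane axis
    v = _cross(n, u)                   # second in-plane axis (unit length already)
    points = []
    for i in range(n_points):
        theta = 2.0 * math.pi * i / n_points
        points.append(tuple(
            palm_centre[k] + radius * (math.cos(theta) * u[k] + math.sin(theta) * v[k])
            for k in range(3)
        ))
    return points


if __name__ == "__main__":
    sweet_spot = (0.0, 0.0, 13.5)  # hypothetical sweet-spot position (cm)
    palm = (1.0, 2.0, 16.0)        # hypothetical tracked palm centre (cm)
    radius = cone_radius(math.dist(palm, sweet_spot))
    # Palm facing down towards the array.
    path = circle_on_palm(palm, (0.0, 0.0, -1.0), radius)
    print(f"radius {radius:.2f} cm, {len(path)} points")
```

Building the circle from two axes perpendicular to the palm normal keeps it flat against the palm however the hand is tilted, which matches the behaviour described above.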

User Study

We conducted an experiment to evaluate how well HaptiGlow could guide users’ hand positions in mid-air. For each task, we placed a random target point above the Ultrahaptics device. Users were asked to use the feedback to locate the target point “as quickly and as accurately as possible”. Each task ended when they pressed a large button with their other hand.

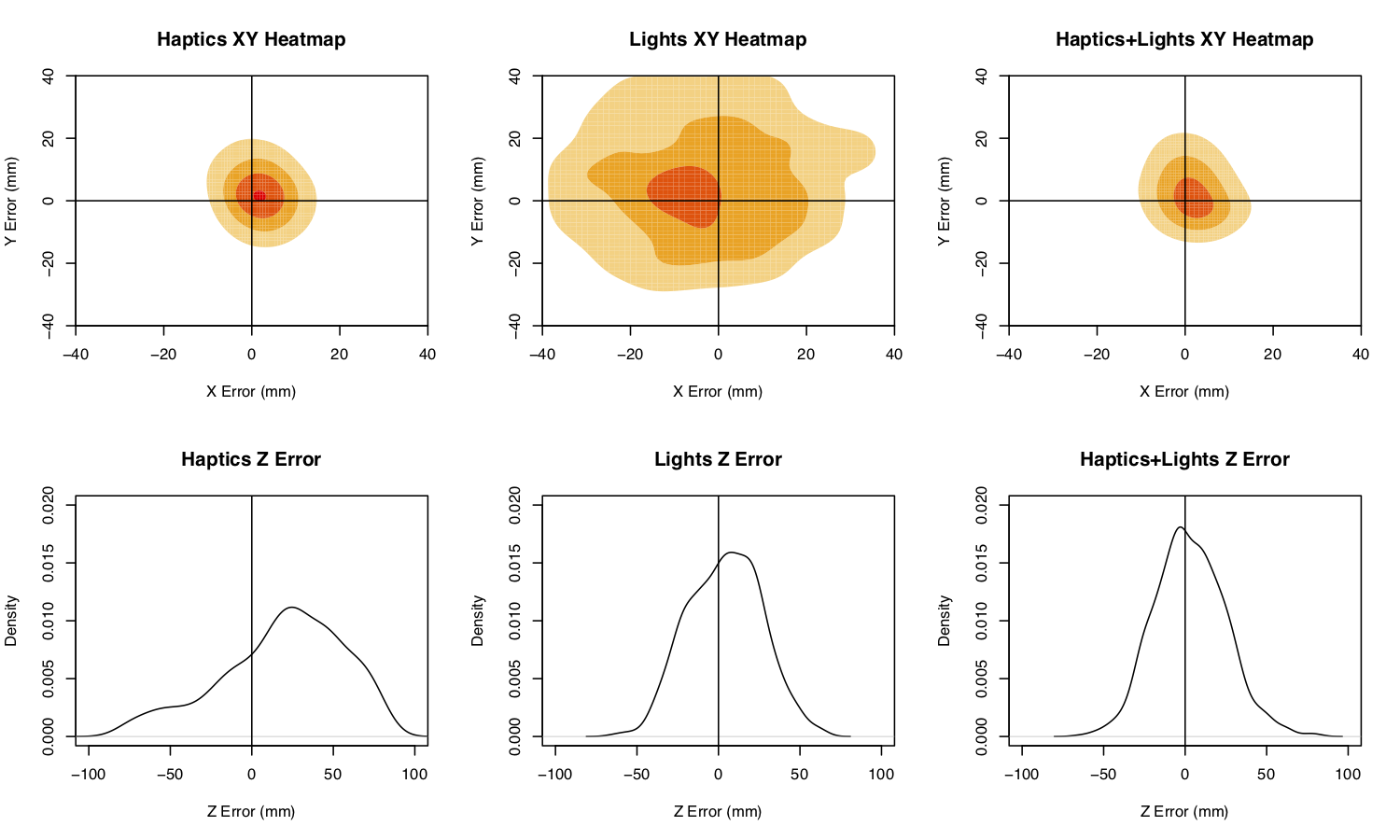

Our experiment looked at the performance of haptic feedback on its own, visual feedback on its own, and the combination of both modalities. The following image shows the error between the final hand position and the target position. The top row shows error in the XY plane (horizontal position above the ultrasound haptics device); the bottom row shows error in terms of height above the device.
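The two error measures can be expressed as simple functions. This sketch assumes each final hand position and target is an (x, y, z) tuple in a device-centred frame where x and y are horizontal and z is height above the array; the function names and example values are hypothetical, and it does not assume anything about the format of the published dataset.

```python
import math
from statistics import mean


def xy_error(hand, target):
    """Horizontal (XY-plane) distance between the final hand position and the target."""
    return math.hypot(hand[0] - target[0], hand[1] - target[1])


def height_error(hand, target):
    """Signed height error: positive means the hand ended above the target."""
    return hand[2] - target[2]


def summarise(trials):
    """Mean XY error and mean signed height error over a list of (hand, target) pairs."""
    return (mean(xy_error(h, t) for h, t in trials),
            mean(height_error(h, t) for h, t in trials))


if __name__ == "__main__":
    # Hypothetical trials for illustration only (not data from the study).
    trials = [((1.0, -0.5, 16.0), (0.0, 0.0, 14.0)),
              ((-2.0, 1.0, 12.5), (-1.5, 0.5, 13.0))]
    mean_xy, mean_height = summarise(trials)
    print(f"mean XY error {mean_xy:.2f}, mean height error {mean_height:+.2f}")
```

Keeping the height error signed, rather than taking its absolute value, is what lets a consistent bias (such as hands ending up above the target) show up in the mean.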

Our results show that haptic feedback was very effective for getting the hand to the right position over the array, but participants consistently placed their hand too high. Visual feedback was less effective in the XY plane. This is unsurprising, because the visual feedback encoded 3D information using only one dimension of output (i.e., colour interpolated between white and green). However, the visual feedback was very effective at getting the hand to the right height above the device.

The best performance came in the multimodal feedback condition. Users took advantage of the strengths of each individual modality, using haptics to locate their hand position over the array and visual feedback to find the optimal hand height. Several participants reported using each modality in this way, which is reflected in the results.

Data from this user study is openly available under a CC BY 4.0 license: https://doi.org/10.5281/zenodo.2631397

Summary

Hand position is important for high-quality input and mid-air haptic output. We developed a simple, low-cost method of enhancing an ultrasound haptic display with peripheral visual feedback, as a means of helping users find where to place their hand for optimal interaction. Our results support the combination of these modalities, leading to fast and effective hand placement above an ultrasound haptics device.

Acknowledgements

This research has received funding from the 🇪🇺 European Union’s Horizon 2020 research and innovation programme under grant agreement #737087. This work was completed as part of the Levitate project.