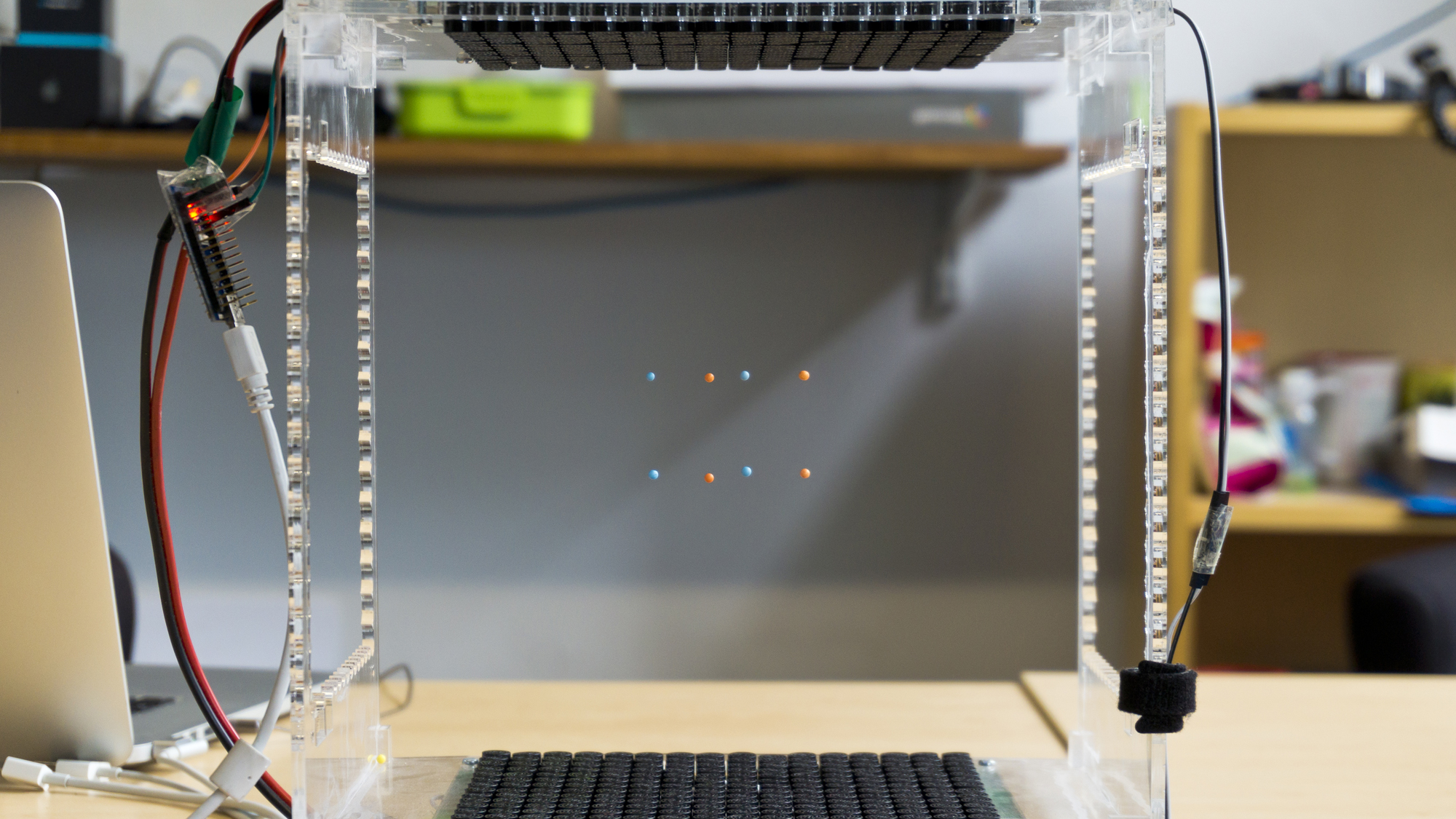

The Levitate project is investigating a novel type of display where interactive content is composed of levitating objects. We use ultrasound to levitate and move “particles” in mid-air. These acoustically-levitated particles are the basic display elements in a levitating object display.

Levitating object displays have some interesting properties:

- Content is composed of physical objects, actuated by an invisible medium (sound waves) with no mechanical constraints;

- Users can see through the content to view and interact with it from many angles;

- They can interact inside the display volume, using the space around and between the particles to manipulate and interact with content;

- Non-interactive objects and materials can be combined with levitation, enabling new interactive experiences;

- Mid-air haptics and directional audio can be presented using similar acoustic principles and hardware, for multimodal content in a single display.

Levitating object displays enable new interaction techniques and applications. One of the aims of the Levitate project is to explore these applications and develop novel techniques for interacting with levitating objects. Whilst we focus on acoustic levitation, these techniques are also relevant to other types of mid-air display (e.g., those using magnetic levitation, drones, or AR headsets).

What does levitation look like?

Levitating object displays

These are a novel type of display, where content is composed of levitating objects. With the current state of the art, up to a dozen objects can be levitated and moved independently. Advances in ultrasound field synthesis and improved hardware will enable these displays to support many more display elements, and our project is also advancing the state of the art in acoustics to allow this.

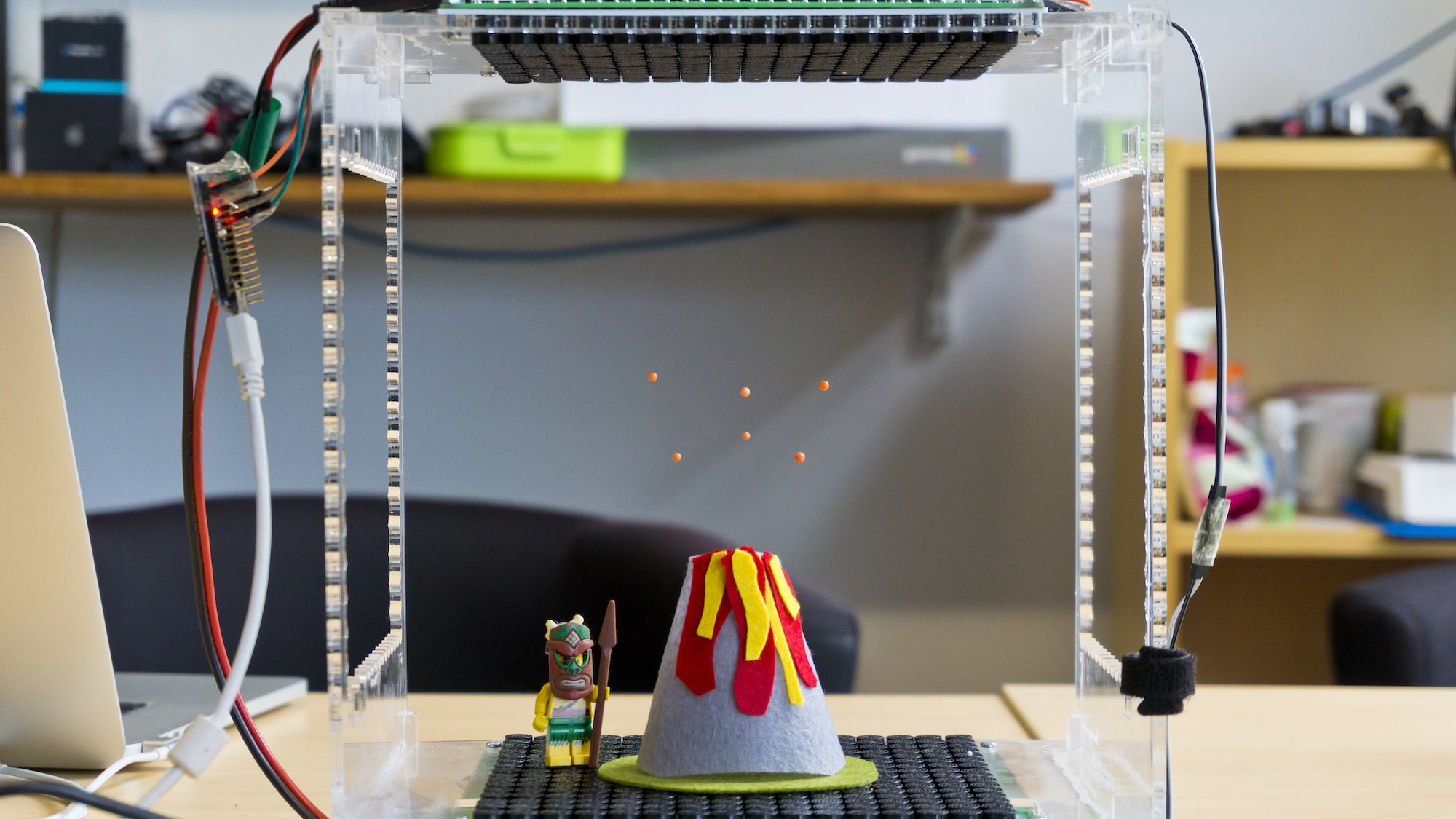

Levitating objects could represent data through their positions in mid-air and could be used to create physical 3D representations of data (i.e., physical visualisations). Acoustic levitation is dynamic, so object movement can also carry meaning, creating dynamic data displays. For example, levitating particles could show simulations of astronomical or physical data.
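To make the idea of encoding data in particle positions concrete, here is a minimal Python sketch, assuming a display volume whose usable height runs from 0 to 0.1 m. That range, the function name `map_to_heights`, and the linear scaling are hypothetical choices for illustration, not the project's implementation. It rescales a list of data values so the smallest sits at the bottom of the volume and the largest at the top.

```python
from typing import List, Sequence


def map_to_heights(values: Sequence[float], z_min: float = 0.0,
                   z_max: float = 0.1) -> List[float]:
    """Linearly rescale data values to particle heights (metres) within a
    hypothetical display volume spanning z_min..z_max."""
    if z_max <= z_min:
        raise ValueError("z_max must be greater than z_min")
    if not values:
        return []
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # Every value is identical: place all particles at mid-height.
        return [(z_min + z_max) / 2 for _ in values]
    scale = (z_max - z_min) / (v_max - v_min)
    return [z_min + (v - v_min) * scale for v in values]


if __name__ == "__main__":
    # Three data points become three particle heights (a mid-air "bar chart").
    print(map_to_heights([2.0, 5.0, 8.0]))
```

Because levitated particles can move, recomputing the heights as the data changes and moving each particle to its new target is one way to build a dynamic display. The sketch does not model how quickly real levitation hardware can move a particle without dropping it.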

As the Levitate project progresses, we’ll be creating new interactive demonstrators to showcase some novel applications enabled by this technology. See Levitating Particle Displays for a summary of our Pervasive Displays 2018 paper outlining the display capabilities enabled by levitation.

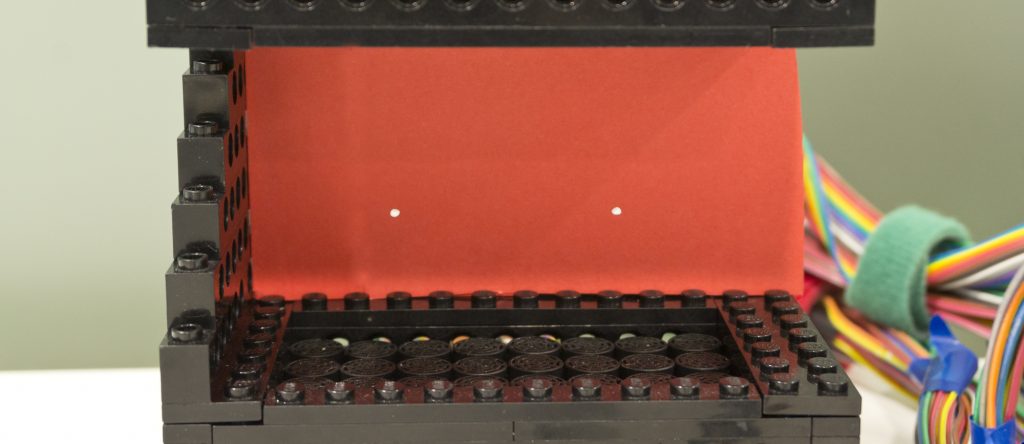

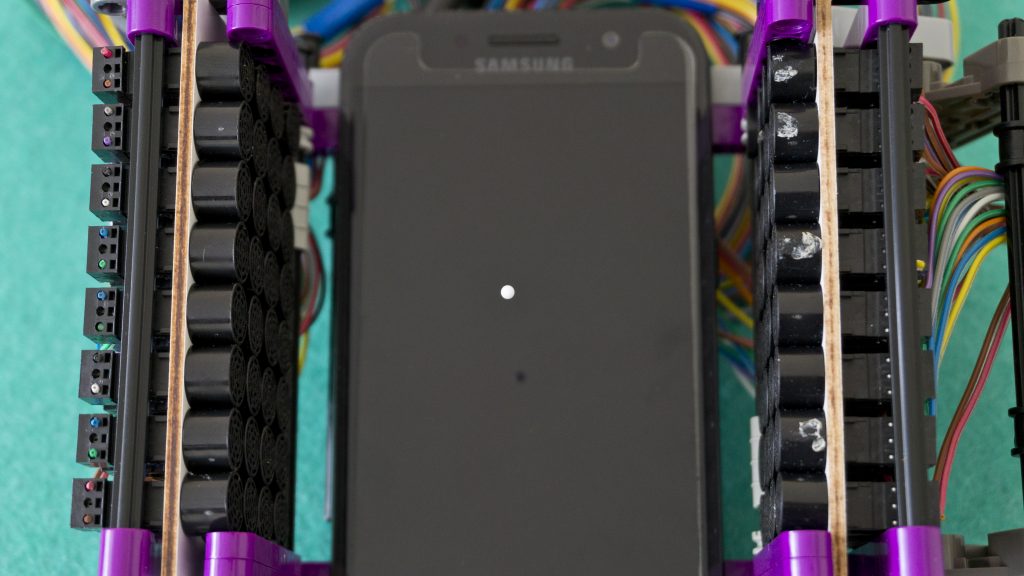

Levitation Around Physical Objects

My work has been exploring alternative ways of producing content using levitating particles. Instead of creating composite objects composed of several particles (like the cube shown before), we can also levitate particles in the space around other physical objects.

This display concept offers new possibilities for content creation. Interactivity can be added to otherwise non-interactive objects, because the levitating particles can be actuated without having to modify or instrument the original object. There are no mechanical constraints, so the levitating particles can be used to create dynamic visual content in the space surrounding the objects. Despite their simple appearance, the particles can be used in expressive ways, because their context adds meaning to their position and behaviour.

This display concept is discussed in a full paper accepted to Pervasive Displays 2019. See Enhancing Physical Objects with Actuated Levitating Particles for more about my work in this area.

Interacting with levitating objects

One of my aims on this project is to develop new interaction techniques and applications based on levitation. We started by focusing on object selection, since this is a fundamental interaction. Selection is necessary for other actions to take place (e.g., moving an object or querying its value).

We developed a technique called Point-and-Shake, which combines ray-cast pointing input with object shaking as a feedback mechanism: users point at the object they want to select, and that object shakes to confirm it is the current target. A paper describing our selection technique was published at CHI 2018; its entry in the Publications list below links to a video demonstration of Point-and-Shake.
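The selection step of Point-and-Shake can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation from the CHI 2018 paper: the pointing ray is assumed to come from a hand tracker as an origin and a direction, the 1 cm tolerance (`max_dist`) and the shake amplitude and frequency are hypothetical values, and a sinusoidal side-to-side offset is just one plausible way to make a particle shake. `pick_target` returns the particle closest to the ray (ignoring particles behind the hand), and `shake_offset` gives the displacement to add to that particle's position over time.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pick_target(origin: Vec3, direction: Vec3, particles: Sequence[Vec3],
                max_dist: float = 0.01) -> Optional[int]:
    """Index of the particle nearest the pointing ray, or None if none is
    within max_dist metres of it (hypothetical tolerance)."""
    norm = math.sqrt(_dot(direction, direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    d = (direction[0] / norm, direction[1] / norm, direction[2] / norm)
    best, best_dist = None, math.inf
    for i, p in enumerate(particles):
        t = _dot(_sub(p, origin), d)  # distance along the ray
        if t < 0:
            continue  # particle is behind the pointing hand
        foot = (origin[0] + t * d[0], origin[1] + t * d[1], origin[2] + t * d[2])
        dist = math.dist(p, foot)  # perpendicular distance to the ray
        if dist <= max_dist and dist < best_dist:
            best, best_dist = i, dist
    return best


def shake_offset(t: float, amplitude: float = 0.002, freq_hz: float = 5.0) -> float:
    """Side-to-side displacement (metres) to add to the selected particle at time t (s)."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t)


if __name__ == "__main__":
    particles = [(0.0, 0.0, 0.10), (0.03, 0.0, 0.10)]
    # Pointing straight along the z axis from the origin.
    print(pick_target((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), particles))  # → 0
```

Separating target picking from feedback mirrors the two halves of the technique: pointing decides which particle is the candidate, and shaking only that particle lets the user confirm the choice before committing to it.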

Sound and haptics

Another aim of the project is to develop multimodal interactions using sound and haptics, to enhance the experience of interacting with the levitating objects. This is a multidisciplinary project involving experts in acoustics, HCI, and ultrasound haptics. The hardware and acoustic techniques used for acoustic levitation are similar to those used for ultrasound haptics and directional audio, so the project aims to combine these modalities to create new multimodal experiences.
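One principle shared by levitation, mid-air haptics, and directional audio is phased-array focusing: each transducer is driven with a phase chosen so that its wave arrives in step with the others at a target point. The Python sketch below computes those phases, assuming 40 kHz transducers and a speed of sound of 343 m/s (typical values assumed here, not taken from this page); the function name `focus_phases` is hypothetical. Levitation traps usually need additional phase structure beyond a simple focus, so this illustrates only the common focusing step, not the project's levitation method.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 °C (assumed)
FREQUENCY = 40_000.0     # Hz, a common ultrasonic transducer frequency (assumed)


def focus_phases(transducers: Sequence[Vec3], focus: Vec3,
                 frequency: float = FREQUENCY,
                 c: float = SPEED_OF_SOUND) -> List[float]:
    """Emission phase (radians, wrapped to [0, 2*pi)) for each transducer so
    that all waves arrive at `focus` in phase."""
    k = 2 * math.pi * frequency / c  # wavenumber
    phases = []
    for pos in transducers:
        d = math.dist(pos, focus)  # transducer-to-focus distance
        # Advance the emission phase to cancel the propagation phase k*d.
        phases.append((-k * d) % (2 * math.pi))
    return phases


if __name__ == "__main__":
    # Three transducers in a row, focusing 10 cm above the middle one.
    row = [(-0.01, 0.0, 0.0), (0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
    print(focus_phases(row, (0.0, 0.0, 0.1)))
```

The two outer transducers are equidistant from the focus, so they receive the same phase; moving the focus just means recomputing the phases for the new point.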

Research summaries

- Point-and-Shake: Selecting Levitating Objects

- Levitating Particle Displays

- Enhancing Physical Objects with Actuated Levitating Particles

- HaptiGlow: Positioning Hands for Optimal Mid-Air Interaction

Engage

- Website: www.levitateproject.com

- Twitter: @LevitateProj

Highlights

- Jan 2020: Demo and LBW papers accepted to ACM CHI ’20.

- Jul 2019: Full paper presented at IEEE World Haptics ’19.

- Jun 2019: Full paper presented at ACM Pervasive Displays ’19.

- May 2019: Demo at ACM CHI ’19.

- Nov 2018: Demo at SICSA DemoFest ’18.

- Jun 2018: Full paper presented at Pervasive Displays ’18.

- Apr 2018: Full paper presented at CHI ’18.

- Nov 2017: Demo at ACM ICMI ’17.

- Oct 2017: Demos at SICSA DemoFest ’17 and ACM ISS ’17.

- Jun 2017: Demo at Pervasive Displays ’17.

- Jan 2017: Start of project.

Acknowledgements

This research has received funding from the 🇪🇺 European Union’s Horizon 2020 research and innovation programme under grant agreement #737087.

Publications

Enhancing Physical Objects with Actuated Levitating Particles

E. Freeman, A. Marzo, P. B. Kourtelos, J. R. Williamson, and S. Brewster.

In Proceedings of the 8th ACM International Symposium on Pervasive Displays – PerDis ’19, Article 2. 2019.

@inproceedings{PerDis2019,
  author = {Freeman, Euan and Marzo, Asier and Kourtelos, Praxitelis B. and Williamson, Julie R. and Brewster, Stephen},
  booktitle = {{Proceedings of the 8th ACM International Symposium on Pervasive Displays - PerDis '19}},
  title = {{Enhancing Physical Objects with Actuated Levitating Particles}},
  year = {2019},
  publisher = {ACM},
  pages = {Article 2},
  url = {http://euanfreeman.co.uk/levitate/enhancing-physical-objects-with-actuated-levitating-particles/},
  pdf = {http://research.euanfreeman.co.uk/papers/PerDis_2019.pdf},
  doi = {10.1145/3321335.3324939},
  video = {https://www.youtube.com/watch?v=5vZwTvfWZgo},
}

Three-in-one: Levitation, Parametric Audio, and Mid-Air Haptic Feedback

G. Shakeri, E. Freeman, W. Frier, M. Iodice, B. Long, O. Georgiou, and C. Andersson.

In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems – CHI EA ’19, Paper INT006. 2019.

@inproceedings{CHI2019Demo1,
  author = {Shakeri, G\"{o}zel and Freeman, Euan and Frier, William and Iodice, Michele and Long, Benjamin and Georgiou, Orestis and Andersson, Carl},
  booktitle = {{Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems - CHI EA '19}},
  title = {{Three-in-one: Levitation, Parametric Audio, and Mid-Air Haptic Feedback}},
  year = {2019},
  publisher = {ACM},
  pages = {Paper INT006},
  doi = {10.1145/3290607.3313264},
  pdf = {https://dl.acm.org/authorize?N673841},
}

Tangible Interactions with Acoustic Levitation

A. Marzo, S. Kockaya, E. Freeman, and J. Williamson.

In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems – CHI EA ’19, Paper INT005. 2019.

@inproceedings{CHI2019Demo2,
  author = {Marzo, Asier and Kockaya, Steven and Freeman, Euan and Williamson, Julie},
  booktitle = {{Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems - CHI EA '19}},
  title = {{Tangible Interactions with Acoustic Levitation}},
  year = {2019},
  publisher = {ACM},
  pages = {Paper INT005},
  doi = {10.1145/3290607.3313265},
  pdf = {https://dl.acm.org/authorize?N673840},
}

Levitating Particle Displays with Interactive Voxels

E. Freeman, J. Williamson, P. Kourtelos, and S. Brewster.

In Proceedings of the 7th ACM International Symposium on Pervasive Displays – PerDis ’18, Article 15. 2018.

@inproceedings{PerDis2018,
  author = {Freeman, Euan and Williamson, Julie and Kourtelos, Praxitelis and Brewster, Stephen},
  booktitle = {{Proceedings of the 7th ACM International Symposium on Pervasive Displays - PerDis '18}},
  title = {{Levitating Particle Displays with Interactive Voxels}},
  year = {2018},
  publisher = {ACM},
  pages = {Article 15},
  doi = {10.1145/3205873.3205878},
  url = {http://euanfreeman.co.uk/levitate/levitating-particle-displays/},
  pdf = {http://research.euanfreeman.co.uk/papers/PerDis_2018.pdf},
}

Point-and-Shake: Selecting from Levitating Object Displays

E. Freeman, J. Williamson, S. Subramanian, and S. Brewster.

In Proceedings of the 36th Annual ACM Conference on Human Factors in Computing Systems – CHI ’18, Paper 18. 2018.

@inproceedings{CHI2018,
  author = {Freeman, Euan and Williamson, Julie and Subramanian, Sriram and Brewster, Stephen},
  booktitle = {{Proceedings of the 36th Annual ACM Conference on Human Factors in Computing Systems - CHI '18}},
  title = {{Point-and-Shake: Selecting from Levitating Object Displays}},
  year = {2018},
  publisher = {ACM},
  pages = {Paper 18},
  doi = {10.1145/3173574.3173592},
  url = {http://euanfreeman.co.uk/levitate/},
  video = {https://www.youtube.com/watch?v=j8foZ5gahvQ},
  pdf = {http://research.euanfreeman.co.uk/papers/CHI_2018.pdf},
  data = {https://zenodo.org/record/2541555},
}

Textured Surfaces for Ultrasound Haptic Displays

E. Freeman, R. Anderson, J. Williamson, G. Wilson, and S. Brewster.

In Proceedings of the 19th ACM International Conference on Multimodal Interaction – ICMI ’17 Demos, 491-492. 2017.

@inproceedings{ICMI2017Demo,
  author = {Freeman, Euan and Anderson, Ross and Williamson, Julie and Wilson, Graham and Brewster, Stephen},
  booktitle = {{Proceedings of the 19th ACM International Conference on Multimodal Interaction - ICMI '17 Demos}},
  title = {{Textured Surfaces for Ultrasound Haptic Displays}},
  year = {2017},
  publisher = {ACM},
  pages = {491-492},
  doi = {10.1145/3136755.3143020},
  pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2017_Demo.pdf},
}

Floating Widgets: Interaction with Acoustically-Levitated Widgets

E. Freeman, R. Anderson, C. Andersson, J. Williamson, and S. Brewster.

In Proceedings of the ACM International Conference on Interactive Surfaces and Spaces – ISS ’17 Demos, 417-420. 2017.

@inproceedings{ISS2017Demo,
  author = {Freeman, Euan and Anderson, Ross and Andersson, Carl and Williamson, Julie and Brewster, Stephen},
  booktitle = {{Proceedings of the ACM International Conference on Interactive Surfaces and Spaces - ISS '17 Demos}},
  title = {{Floating Widgets: Interaction with Acoustically-Levitated Widgets}},
  year = {2017},
  publisher = {ACM},
  pages = {417-420},
  doi = {10.1145/3132272.3132294},
  pdf = {http://research.euanfreeman.co.uk/papers/ISS_2017_Demo.pdf},
}

Levitate: Interaction with Floating Particle Displays

J. R. Williamson, E. Freeman, and S. Brewster.

In Proceedings of the 6th ACM International Symposium on Pervasive Displays – PerDis ’17 Demos, Article 24. 2017.

@inproceedings{PerDis2017Demo,
  author = {Williamson, Julie R. and Freeman, Euan and Brewster, Stephen},
  booktitle = {{Proceedings of the 6th ACM International Symposium on Pervasive Displays - PerDis '17 Demos}},
  title = {{Levitate: Interaction with Floating Particle Displays}},
  year = {2017},
  publisher = {ACM},
  pages = {Article 24},
  doi = {10.1145/3078810.3084347},
  url = {http://dl.acm.org/citation.cfm?id=3084347},
  pdf = {http://research.euanfreeman.co.uk/papers/PerDis_2017.pdf},
}