I have worked in many areas of Human-Computer Interaction, including: accessible technology, wearable devices, gesture interaction, ultrasound haptics, and novel displays. This page gives a broad overview of my research.

- Acoustic Levitation

- Ultrasound Haptics

- Gesture Interaction

- Wearables for Visually Impaired Children

- Interactive Tabletops in the Home

- Digital Pen and Paper Reminders

- Visual Complexity

- Graph Aesthetics

Acoustic Levitation

I worked on the Levitate project, a four-year EU FET-Open project which investigated novel interfaces composed of objects levitating in mid-air. My research on this project mostly focused on developing new interaction techniques for levitating object displays [1], for example Point-and-Shake, a selection and feedback technique presented at CHI 2018 [2].

Ultrasound Haptic Feedback

I have been working with ultrasound haptics since 2012, when I first experienced the technology at a workshop hosted by Tom Carter and Sriram Subramanian. I contributed to a review of ultrasound haptics in 2020 [3], which I recommend for an overview of this awesome technology.

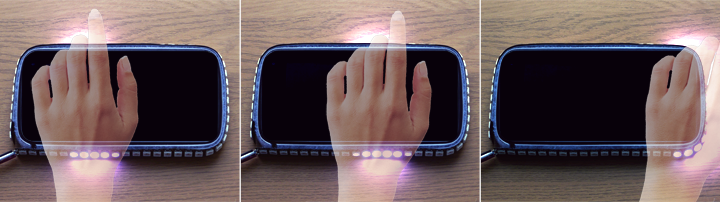

My ICMI 2014 paper [4] used an early prototype of an Ultrahaptics device to give haptic feedback about mid-air gesture input for smartphones. My IEEE World Haptics Conference 2019 paper [5] was about helping users position their hands for optimal ultrasound haptic output.

I have two papers at ACM ICMI 2021 about the perception of ultrasound haptics. One is about perceived motion in ultrasound haptic patterns [6]. The other is about using parametric audio effects alongside ultrasound haptic patterns to modulate perceived roughness [7]. Perception of ultrasound haptic patterns is a topic I’m interested in and wish I had more time to work on!

I collaborated with Ultraleap to explore alternative ways of using ultrasound arrays, including a paper about using focused ultrasound to wirelessly power tangible and wearable devices for mid-air interaction [8].

I chaired sessions at the CHI 2016 and CHI 2018 workshops on mid-air haptics and displays, and have chaired paper sessions at CHI 2018 and CHI 2019 about touch and haptic interfaces. I’m also a co-editor of an upcoming book on ultrasound haptics, due to be published in 2022 – watch this space!

Gesture Interaction

My PhD research focused on gesture interaction and around-device interaction. Some of my early PhD work looked at above-device interaction with mobile phones [4, 9], which I discuss in more detail below. Towards the end of my PhD I also studied gesture interaction with simple household devices, like thermostats and lights, which present interesting design problems because they lack screens and other output capabilities [10].

I am particularly interested in how gesture interaction techniques can be improved with better feedback design. Whereas most gesture interfaces rely on visual feedback, I am more interested in non-visual modalities and how these can be used to help users interact more easily and effectively. I have looked at tactile feedback for gesture interfaces [4, 5]; this is a promising modality but requires novel hardware solutions to overcome the challenges of giving tactile feedback without physical contact with a device. I have also looked at other types of output, including sound and interactive light, for giving feedback during gesture interaction. My PhD research in this area was funded by a studentship from Nokia Research in Finland.

Despite significant advances in gesture-sensing technology, there are some fundamental usability problems that still lack good solutions. My PhD thesis focused on one of these problems in particular: addressing gesture systems, that is, how users direct their gestures at the system they want to control. My CHI 2016 paper [10] describes interaction techniques for addressing gesture systems. I've also looked at clutching interaction techniques (ways of engaging and disengaging gesture input) for touchless gesture systems, in our CHI 2022 paper on touchless gestures for medical imaging systems [11].

Above-Device Gestures

Early in my PhD I looked at above-device gesture design. We asked users to create above-device gestures for some common mobile phone tasks. From the many gesture designs gathered in that study, we then created and evaluated two gesture sets. Based on the outcomes of these studies, we proposed design recommendations for usable above-device interfaces [9].

Tactile Feedback for Gestures

Small devices, like mobile phones and wearables, have limited display capabilities. Gesture interaction is very uncertain for users, so it needs feedback to help them gesture effectively, but giving visual feedback on a small device takes screen space away from other content. Instead, other modalities, like sound and touch, could be used to give feedback. However, an obvious limitation of touch feedback is that users don't always touch the devices they gesture towards. We looked at how we could give tactile feedback during gesture interaction, using ultrasound haptics and distal feedback from wearables [4].

Interactive Light Feedback for Gestures

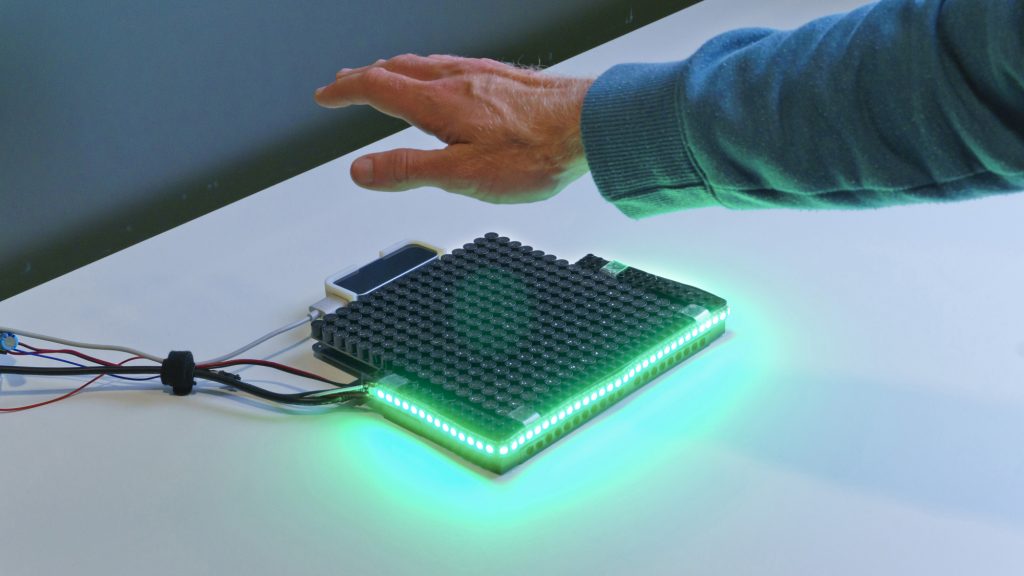

Another way of giving visual feedback on small devices without taking away limited screen space is to give visual cues in the space surrounding the device instead. We embedded LEDs in the edge of some devices so that they could illuminate surrounding table or wall surfaces, giving low-fidelity but effective visual feedback about gestures. We call this interactive light feedback [12]. As well as keeping the screen free for interactive content, these interactive light cues were also noticeable from a short distance away. For more on this, see the Interactive Light Feedback project page [12].

Wearables for Visually Impaired Children

I worked on the ABBI (Audio Bracelet for Blind Interaction) project for a year. The ABBI project developed a bracelet for young visually impaired children; when the bracelet moved, it synthesised sound in response to that movement. The primary purpose of the bracelet was sensory rehabilitation to improve spatial cognition: by hearing how they and other people moved, the children could improve their understanding of movement and their spatial awareness.

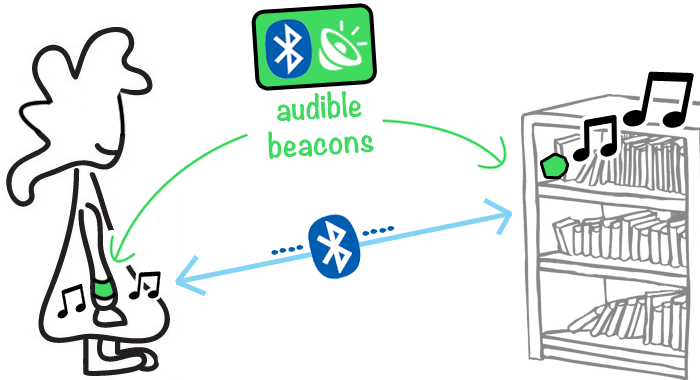

My research looked at how the capabilities of the ABBI bracelet could be used for other things. The bracelet had motion sensors, Bluetooth communication, on-board audio synthesis and limited processing power, so my research investigated how these might facilitate other interactions. Some of my work looked at how Bluetooth beacons could be used with a wearable device to present relevant audio cues about surroundings, to help visually impaired children understand what is happening nearby [13]. I also considered how the bracelet might be used to detect location and activity within the home, so that the lighting could be adapted to make it easier to see, or to draw attention to specific areas of the home [14].
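As an illustration of the beacon idea, here is a minimal sketch of how a wearable might decide which audio cue to play as a child moves around. It is not the ABBI implementation: the `ProximityCuePlayer` and `BeaconCue` names, the -70 dBm threshold, and the rule of treating the strongest signal as the nearest beacon are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class BeaconCue:
    """Hypothetical pairing of a beacon ID with a description of its audio cue."""
    beacon_id: str
    cue: str


class ProximityCuePlayer:
    """Decides which audio cue to play from one scan of beacon RSSI readings.

    Assumptions (not taken from the ABBI project): RSSI is reported in dBm,
    a reading at or above `threshold_dbm` means the beacon is "nearby", and
    a cue is only played when the nearest nearby beacon changes, so the same
    sound is not repeated on every scan.
    """

    def __init__(self, cues: Dict[str, BeaconCue], threshold_dbm: float = -70.0) -> None:
        self.cues = cues
        self.threshold_dbm = threshold_dbm
        self.current: Optional[str] = None  # beacon the wearer was last near

    def update(self, readings: Dict[str, float]) -> Optional[str]:
        """Return a cue to play for this scan, or None to stay silent."""
        # Ignore unknown beacons and those whose signal is too weak.
        nearby = {beacon: rssi for beacon, rssi in readings.items()
                  if beacon in self.cues and rssi >= self.threshold_dbm}
        if not nearby:
            self.current = None
            return None
        # Treat the strongest (least negative) signal as the closest beacon.
        nearest = max(nearby, key=lambda beacon: nearby[beacon])
        if nearest == self.current:
            return None  # still near the same place: stay quiet
        self.current = nearest
        return self.cues[nearest].cue


player = ProximityCuePlayer({
    "door": BeaconCue("door", "door chime"),
    "swings": BeaconCue("swings", "creaking swing"),
})
print(player.update({"door": -60.0, "swings": -80.0}))  # door chime
print(player.update({"door": -62.0}))                   # None (still near the door)
print(player.update({"door": -85.0, "swings": -65.0}))  # creaking swing
```

Signal strength is a noisy proxy for distance, so a deployed system would probably smooth readings over several scans before switching cues.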

Reminders: Tabletops and Digital Pens

Before starting my PhD I worked on two projects looking at home-care reminder systems for elderly people. Reminders can help people live independently by prompting them to do things, such as taking medication or making sure the heating is on, and helping them manage their lives, for example reminding them of upcoming appointments or tasks such as shopping.

Tabletops in the Home

My final-year undergraduate project looked at how interactive tabletops could be used to deliver reminders. People often have a coffee table in a prominent location in the living room, making the tabletop an ideal display for ambient information and reminders. We wanted to see what challenges had to be overcome for tabletops to be an effective reminder display. One of the interesting challenges this project addressed was how to use the tabletop both as a display and as a normal table. Clutter meant that large parts of the display were often occluded, so reminders needed to be placed somewhere they would still be noticed. Part of this project was presented as an extended abstract at CHI 2013 [15].
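One simple way to think about the occlusion problem, sketched here under stated assumptions, is to treat the tabletop as a grid of cells, mark the cells covered by objects as occluded, and search for the free region closest to a preferred location. The grid representation, the `parse_grid` and `place_reminder` functions and the squared-distance rule are hypothetical illustrations, not the technique described in [15].

```python
from typing import List, Optional, Tuple

Grid = List[List[bool]]  # True = cell covered by an object on the table


def parse_grid(rows: List[str]) -> Grid:
    """Build a grid from strings where '#' marks an occluded cell and '.' a free one."""
    return [[ch == "#" for ch in row] for row in rows]


def region_is_free(grid: Grid, row: int, col: int, h: int, w: int) -> bool:
    """Return True if the h x w block with top-left corner (row, col) has no occluded cells."""
    return all(not grid[r][c]
               for r in range(row, row + h)
               for c in range(col, col + w))


def place_reminder(grid: Grid, h: int, w: int,
                   preferred: Tuple[int, int]) -> Optional[Tuple[int, int]]:
    """Find the free h x w region whose top-left corner is closest to `preferred`.

    Returns (row, col) of the chosen region, or None if no free region fits.
    Ties are broken by scan order (top to bottom, left to right).
    """
    rows, cols = len(grid), len(grid[0])
    best: Optional[Tuple[int, int]] = None
    best_dist: Optional[int] = None
    for r in range(rows - h + 1):
        for c in range(cols - w + 1):
            if not region_is_free(grid, r, c, h, w):
                continue
            # Squared Euclidean distance, in cells, to the preferred spot.
            dist = (r - preferred[0]) ** 2 + (c - preferred[1]) ** 2
            if best_dist is None or dist < best_dist:
                best, best_dist = (r, c), dist
    return best


table = parse_grid([
    "##....",  # a mug near one corner
    "##....",
    "..###.",  # a book in the middle
    "..###.",
])
print(place_reminder(table, 2, 2, preferred=(3, 0)))  # (2, 0)
print(place_reminder(table, 3, 3, preferred=(0, 0)))  # None: nothing that large is free
```

A brute-force scan is fine for a coarse grid; a finer-grained display would need a smarter search, and a real system would also have to detect which areas are occluded in the first place.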

Digital Pen and Paper Reminders

After graduating with my undergraduate degree, I worked on the MultiMemoHome project as a research assistant. My role in the project was to design and develop a paper-based diary system for digital pens, which let users schedule reminders using pen and paper. Reminders were then delivered using a tablet placed in the living room. We were interested in a paper-based approach because it was already favoured by elderly people. We used a co-design approach to create a reminder system, Rememo, which we then deployed in people's homes for two weeks at a time. This project was presented as an extended abstract at CHI 2013 [16] and as a workshop paper at Mobile HCI 2014 [17].

Predicting Visual Complexity

As an undergraduate I received two scholarships to fund research over my summer holidays. One of these scholarships funded research with Helen Purchase into visual complexity. We wanted to find out whether we could predict how visually complex an image is by analysing it with image processing techniques. We gathered both rankings and ratings of visual complexity using an online survey and used these to construct a linear regression model, with a collection of image metrics as predictors. This project was presented at Diagrammatic Representation and Inference 2012 [18] and Predicting Perceptions 2012 [19].
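The modelling step can be sketched as ordinary least squares regression, solving the normal equations (XᵀX)β = Xᵀy. This is a minimal, dependency-free illustration, not the analysis code behind [18, 19]: the function names are hypothetical, and the two predictors in the usage example (`edge_density` and `colour_count`) are placeholder metrics with synthetic values generated from a known linear relationship, not data from the study.

```python
from typing import List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest entry in this column for stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_linear_regression(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares; returns [intercept, coef_1, ..., coef_p]."""
    rows = [[1.0] + list(x) for x in X]  # prepend a column of ones for the intercept
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(beta: Sequence[float], x: Sequence[float]) -> float:
    """Predicted complexity rating for one image's metric vector."""
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))


# Synthetic illustration: ratings = 1 + 2 * edge_density + 0.5 * colour_count.
features = [[0.1, 3], [0.4, 1], [0.3, 5], [0.8, 2], [0.6, 4]]  # [edge_density, colour_count]
ratings = [1 + 2 * e + 0.5 * c for e, c in features]
beta = fit_linear_regression(features, ratings)
print([round(b, 6) for b in beta])
```

Because the synthetic ratings lie exactly on a plane, the fit recovers an intercept of 1 and coefficients of 2 and 0.5, up to floating-point rounding. With real survey data the fit would not be exact, and a statistics package would normally be used to report goodness of fit as well.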

Aesthetic Properties of Graphs

An earlier research scholarship also funded research with Helen Purchase, this time looking at aesthetic properties of hand-drawn graphs using SketchNode, a tool which lets users draw graphs using a stylus. We devised a series of aesthetic properties describing graph appearance and created algorithms to measure these properties. Aesthetic properties included features such as node orthogonality (were nodes placed in a grid-like manner?), edge length consistency (were edges of similar length?) and edge orthogonality (were edges largely perpendicular and arranged in a grid-like manner?). I produced a tool to analyse a large corpus of user-drawn graphs from earlier research studies.
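To give a flavour of how such properties can be turned into numbers, here is a sketch of two of them: edge length consistency and edge orthogonality. The formulas (one minus the coefficient of variation of edge lengths, and each edge's angular deviation from the nearest horizontal or vertical axis) are assumptions chosen for illustration; they are not necessarily the definitions used in the original analysis tool.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Edge = Tuple[str, str]


def edge_lengths(nodes: Dict[str, Point], edges: List[Edge]) -> List[float]:
    """Euclidean length of each edge, given node positions."""
    return [math.dist(nodes[a], nodes[b]) for a, b in edges]


def edge_length_consistency(nodes: Dict[str, Point], edges: List[Edge]) -> float:
    """1.0 when all edges have equal length, falling towards 0 as lengths vary.

    Computed as 1 - (population standard deviation / mean length), clamped at 0.
    """
    if not edges:
        raise ValueError("need at least one edge")
    lengths = edge_lengths(nodes, edges)
    avg = mean(lengths)
    if avg == 0:
        return 0.0
    return max(0.0, 1.0 - pstdev(lengths) / avg)


def edge_orthogonality(nodes: Dict[str, Point], edges: List[Edge]) -> float:
    """Mean per-edge score: 1.0 for horizontal or vertical edges, 0.0 for 45-degree edges."""
    if not edges:
        raise ValueError("need at least one edge")
    scores = []
    for a, b in edges:
        (x1, y1), (x2, y2) = nodes[a], nodes[b]
        angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 90.0
        deviation = min(angle, 90.0 - angle)  # distance to the nearest axis, 0..45 degrees
        scores.append(1.0 - deviation / 45.0)
    return mean(scores)


square = {"a": (0, 0), "b": (10, 0), "c": (10, 10), "d": (0, 10)}
square_edges = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")]
print(edge_length_consistency(square, square_edges))  # 1.0
print(edge_orthogonality(square, square_edges))       # close to 1.0
print(edge_orthogonality(square, [("a", "c")]))      # close to 0.0 (a diagonal edge)
```

Both scores run from 0 to 1 so that they can be compared across graphs of different sizes, which matters when analysing a large corpus of hand-drawn graphs.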

References

[1] Levitating Particle Displays with Interactive Voxels

E. Freeman, J. Williamson, P. Kourtelos, and S. Brewster.

In Proceedings of the 7th ACM International Symposium on Pervasive Displays – PerDis ’18, Article 15. 2018.

@inproceedings{PerDis2018,

author = {Freeman, Euan and Williamson, Julie and Kourtelos, Praxitelis and Brewster, Stephen},

booktitle = {{Proceedings of the 7th ACM International Symposium on Pervasive Displays - PerDis '18}},

title = {{Levitating Particle Displays with Interactive Voxels}},

year = {2018},

publisher = {ACM},

pages = {Article 15},

doi = {10.1145/3205873.3205878},

url = {http://euanfreeman.co.uk/levitate/levitating-particle-displays/},

pdf = {http://research.euanfreeman.co.uk/papers/PerDis_2018.pdf},

}

[2] Point-and-Shake: Selecting from Levitating Object Displays

E. Freeman, J. Williamson, S. Subramanian, and S. Brewster.

In Proceedings of the 36th Annual ACM Conference on Human Factors in Computing Systems – CHI ’18, Paper 18. 2018.

@inproceedings{CHI2018,

author = {Freeman, Euan and Williamson, Julie and Subramanian, Sriram and Brewster, Stephen},

booktitle = {{Proceedings of the 36th Annual ACM Conference on Human Factors in Computing Systems - CHI '18}},

title = {{Point-and-Shake: Selecting from Levitating Object Displays}},

year = {2018},

publisher = {ACM},

pages = {Paper 18},

doi = {10.1145/3173574.3173592},

url = {http://euanfreeman.co.uk/levitate/},

video = {{https://www.youtube.com/watch?v=j8foZ5gahvQ}},

pdf = {http://research.euanfreeman.co.uk/papers/CHI_2018.pdf},

data = {https://zenodo.org/record/2541555},

}

[3] A Survey of Mid-Air Ultrasound Haptics and Its Applications

I. Rakkolainen, E. Freeman, A. Sand, R. Raisamo, and S. Brewster.

IEEE Transactions on Haptics, vol. 14, pp. 2-19, 2020.

@article{ToHSurvey,

author = {Rakkolainen, Ismo and Freeman, Euan and Sand, Antti and Raisamo, Roope and Brewster, Stephen},

title = {{A Survey of Mid-Air Ultrasound Haptics and Its Applications}},

year = {2020},

publisher = {IEEE},

journal = {IEEE Transactions on Haptics},

volume = {14},

issue = {1},

pages = {2--19},

pdf = {http://research.euanfreeman.co.uk/papers/IEEE_ToH_2020.pdf},

doi = {10.1109/TOH.2020.3018754},

url = {https://ieeexplore.ieee.org/document/9174896},

issn = {2329-4051},

}

[4] Tactile Feedback for Above-Device Gesture Interfaces: Adding Touch to Touchless Interactions

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the International Conference on Multimodal Interaction – ICMI ’14, 419-426. 2014.

@inproceedings{ICMI2014,

author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},

booktitle = {Proceedings of the International Conference on Multimodal Interaction - ICMI '14},

pages = {419--426},

publisher = {ACM},

title = {{Tactile Feedback for Above-Device Gesture Interfaces: Adding Touch to Touchless Interactions}},

pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2014.pdf},

doi = {10.1145/2663204.2663280},

year = {2014},

url = {http://euanfreeman.co.uk/projects/above-device-tactile-feedback/},

video = {{https://www.youtube.com/watch?v=K1TdnNBUFoc}},

}

[5] HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback

E. Freeman, D. Vo, and S. Brewster.

In Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium, TP2A.09. 2019.

@inproceedings{WHC2019,

author = {Freeman, Euan and Vo, Dong-Bach and Brewster, Stephen},

booktitle = {{Proceedings of IEEE World Haptics Conference 2019, the 8th Joint Eurohaptics Conference and the IEEE Haptics Symposium}},

title = {{HaptiGlow: Helping Users Position their Hands for Better Mid-Air Gestures and Ultrasound Haptic Feedback}},

year = {2019},

publisher = {IEEE},

pages = {TP2A.09},

doi = {10.1109/WHC.2019.8816092},

url = {http://euanfreeman.co.uk/haptiglow/},

pdf = {http://research.euanfreeman.co.uk/papers/WHC_2019.pdf},

data = {https://zenodo.org/record/2631398},

video = {https://www.youtube.com/watch?v=hXCH-xBgnig},

}

[6] Perception of Ultrasound Haptic Focal Point Motion

E. Freeman and G. Wilson.

In Proceedings of 23rd ACM International Conference on Multimodal Interaction – ICMI ’21, 697-701. 2021.

@inproceedings{ICMI2021Motion,

author = {Freeman, Euan and Wilson, Graham},

booktitle = {{Proceedings of 23rd ACM International Conference on Multimodal Interaction - ICMI '21}},

title = {{Perception of Ultrasound Haptic Focal Point Motion}},

year = {2021},

publisher = {ACM},

pages = {697--701},

doi = {10.1145/3462244.3479950},

url = {http://euanfreeman.co.uk/perception-of-ultrasound-haptic-focal-point-motion/},

pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2021_Motion.pdf},

data = {https://zenodo.org/record/5142587},

}

[7] Enhancing Ultrasound Haptics with Parametric Audio Effects

E. Freeman.

In Proceedings of 23rd ACM International Conference on Multimodal Interaction – ICMI ’21, 692-696. 2021.

@inproceedings{ICMI2021AudioHaptic,

author = {Freeman, Euan},

booktitle = {{Proceedings of 23rd ACM International Conference on Multimodal Interaction - ICMI '21}},

title = {{Enhancing Ultrasound Haptics with Parametric Audio Effects}},

year = {2021},

publisher = {ACM},

pages = {692--696},

doi = {10.1145/3462244.3479951},

url = {http://euanfreeman.co.uk/enhancing-ultrasound-haptics-with-parametric-audio-effects/},

pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2021_AudioHaptic.pdf},

data = {https://zenodo.org/record/5144878},

}

[8] UltraPower: Powering Tangible & Wearable Devices with Focused Ultrasound

R. Morales Gonzalez, A. Marzo, E. Freeman, W. Frier, and O. Georgiou.

In Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction – TEI ’21, Article 1. 2021.

@inproceedings{TEI2021,

author = {Morales Gonzalez, Rafael and Marzo, Asier and Freeman, Euan and Frier, William and Georgiou, Orestis},

booktitle = {{Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction - TEI '21}},

title = {{UltraPower: Powering Tangible & Wearable Devices with Focused Ultrasound}},

year = {2021},

publisher = {ACM},

pages = {Article 1},

doi = {10.1145/3430524.3440620},

pdf = {http://research.euanfreeman.co.uk/papers/TEI_2021.pdf},

url = {http://euanfreeman.co.uk/ultrapower-powering-tangible-wearable-devices-with-focused-ultrasound/},

}

[9] Towards Usable and Acceptable Above-Device Interactions

E. Freeman, S. Brewster, and V. Lantz.

In Mobile HCI ’14 Posters, 459-464. 2014.

@inproceedings{MobileHCI2014Poster,

author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},

booktitle = {Mobile HCI '14 Posters},

pages = {459--464},

publisher = {ACM},

title = {Towards Usable and Acceptable Above-Device Interactions},

pdf = {http://research.euanfreeman.co.uk/papers/MobileHCI_2014_Poster.pdf},

doi = {10.1145/2628363.2634215},

year = {2014},

}

[10] Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems – CHI ’16, 2319-2331. 2016.

@inproceedings{CHI2016,

author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},

booktitle = {{Proceedings of the 34th Annual ACM Conference on Human Factors in Computing Systems - CHI '16}},

title = {{Do That, There: An Interaction Technique for Addressing In-Air Gesture Systems}},

year = {2016},

publisher = {ACM},

pages = {2319--2331},

doi = {10.1145/2858036.2858308},

pdf = {http://research.euanfreeman.co.uk/papers/CHI_2016.pdf},

url = {http://euanfreeman.co.uk/gestures/},

video = {{https://www.youtube.com/watch?v=6_hGbI_SdQ4}},

}

[11] Investigating Clutching Interactions for Touchless Medical Imaging Systems

S. Cronin, E. Freeman, and G. Doherty.

In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 2022.

@inproceedings{CHI2022Clutching,

title = {Investigating Clutching Interactions for Touchless Medical Imaging Systems},

author = {Cronin, Sean and Freeman, Euan and Doherty, Gavin},

doi = {10.1145/3491102.3517512},

publisher = {Association for Computing Machinery},

booktitle = {Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems},

numpages = {14},

series = {CHI '22},

year = {2022},

video = {https://www.youtube.com/watch?v=MFznaMUG_DU},

pdf = {http://research.euanfreeman.co.uk/papers/CHI_2022_Clutching.pdf},

}

[12] Illuminating Gesture Interfaces with Interactive Light Feedback

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of NordiCHI ’14 Beyond the Switch Workshop. 2014.

@inproceedings{NordiCHI2014Workshop,

author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},

booktitle = {Proceedings of NordiCHI '14 Beyond the Switch Workshop},

title = {{Illuminating Gesture Interfaces with Interactive Light Feedback}},

year = {2014},

pdf = {http://lightingworkshop.files.wordpress.com/2014/09/3-illuminating-gesture-interfaces-with-interactive-light-feedback.pdf},

url = {http://euanfreeman.co.uk/interactive-light-feedback/},

}

[13] Audible Beacons and Wearables in Schools: Helping Young Visually Impaired Children Play and Move Independently

E. Freeman, G. Wilson, S. Brewster, G. Baud-Bovy, C. Magnusson, and H. Caltenco.

In Proceedings of the 35th Annual ACM Conference on Human Factors in Computing Systems – CHI ’17, 4146-4157. 2017.

@inproceedings{CHI2017,

author = {Freeman, Euan and Wilson, Graham and Brewster, Stephen and Baud-Bovy, Gabriel and Magnusson, Charlotte and Caltenco, Hector},

booktitle = {{Proceedings of the 35th Annual ACM Conference on Human Factors in Computing Systems - CHI '17}},

title = {{Audible Beacons and Wearables in Schools: Helping Young Visually Impaired Children Play and Move Independently}},

year = {2017},

publisher = {ACM},

pages = {4146--4157},

doi = {10.1145/3025453.3025518},

url = {http://euanfreeman.co.uk/research/#abbi},

video = {{https://www.youtube.com/watch?v=SGQmt1NeAGQ}},

pdf = {http://research.euanfreeman.co.uk/papers/CHI_2017.pdf},

}

[14] Towards a Multimodal Adaptive Lighting System for Visually Impaired Children

E. Freeman, G. Wilson, and S. Brewster.

In Proceedings of the 18th ACM International Conference on Multimodal Interaction – ICMI ’16, 398-399. 2016.

@inproceedings{ICMI2016Demo1,

author = {Freeman, Euan and Wilson, Graham and Brewster, Stephen},

booktitle = {{Proceedings of the 18th ACM International Conference on Multimodal Interaction - ICMI '16}},

title = {{Towards a Multimodal Adaptive Lighting System for Visually Impaired Children}},

year = {2016},

publisher = {ACM},

pages = {398--399},

doi = {10.1145/2993148.2998521},

pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2016.pdf},

}

[15] Messy Tabletops: Clearing Up the Occlusion Problem

E. Freeman and S. Brewster.

In CHI ’13 Extended Abstracts on Human Factors in Computing Systems, 1515-1520. 2013.

@inproceedings{CHI2013LBW1,

author = {Freeman, Euan and Brewster, Stephen},

booktitle = {CHI '13 Extended Abstracts on Human Factors in Computing Systems},

pages = {1515--1520},

publisher = {ACM},

title = {Messy Tabletops: Clearing Up the Occlusion Problem},

pdf = {http://dl.acm.org/authorize?6811198},

doi = {10.1145/2468356.2468627},

year = {2013},

url = {http://euanfreeman.co.uk/projects/occlusion-management/},

video = {https://www.youtube.com/watch?v=V42GYxnHkEk},

}

[16] Designing a Smartpen Reminder System for Older Adults

J. Williamson, M. McGee-Lennon, E. Freeman, and S. Brewster.

In CHI ’13 Extended Abstracts on Human Factors in Computing Systems, 73-78. 2013.

@inproceedings{CHI2013LBW2,

author = {Williamson, Julie and McGee-Lennon, Marilyn and Freeman, Euan and Brewster, Stephen},

booktitle = {CHI '13 Extended Abstracts on Human Factors in Computing Systems},

pages = {73--78},

publisher = {ACM},

title = {Designing a Smartpen Reminder System for Older Adults},

pdf = {http://dl.acm.org/authorize?6811896},

doi = {10.1145/2468356.2468371},

year = {2013},

}

[17] Rememo: Designing a Multimodal Mobile Reminder App with and for Older Adults

M. Lennon, G. Hamilton, E. Freeman, and J. Williamson.

In Mobile HCI ’14 Workshop on Re-imagining Commonly Used Mobile Interfaces for Older Adults. 2014.

@inproceedings{MobileHCI2014Workshop,

author = {Lennon, Marilyn and Hamilton, Greig and Freeman, Euan and Williamson, Julie},

booktitle = {Mobile HCI '14 Workshop on Re-imagining Commonly Used Mobile Interfaces for Older Adults},

publisher = {ACM},

title = {{Rememo: Designing a Multimodal Mobile Reminder App with and for Older Adults}},

year = {2014},

url = {http://olderadultsmobileinterfaces.wordpress.com/},

}

[18] An Exploration of Visual Complexity

H. C. Purchase, E. Freeman, and J. Hamer.

In Diagrammatic Representation and Inference, 200-213. 2012.

@inproceedings{Diagrams2012,

author = {Purchase, Helen C. and Freeman, Euan and Hamer, John},

booktitle = {Diagrammatic Representation and Inference},

doi = {10.1007/978-3-642-31223-6_22},

isbn = {978-3-642-31222-9},

pages = {200--213},

title = {An Exploration of Visual Complexity},

pdf = {http://www.springerlink.com/index/G647230842J38T43.pdf},

year = {2012},

}

[19] Predicting Visual Complexity

H. C. Purchase, E. Freeman, and J. Hamer.

In Predicting Perceptions: The 3rd International Conference on Appearance, 62-65. 2012.

@inproceedings{Perceptions2012,

author = {Purchase, Helen C. and Freeman, Euan and Hamer, John},

booktitle = {Predicting Perceptions: The 3rd International Conference on Appearance.},

isbn = {9781471668692},

pages = {62--65},

title = {Predicting Visual Complexity},

pdf = {http://opendepot.org/1060/},

year = {2012},

}