Overview

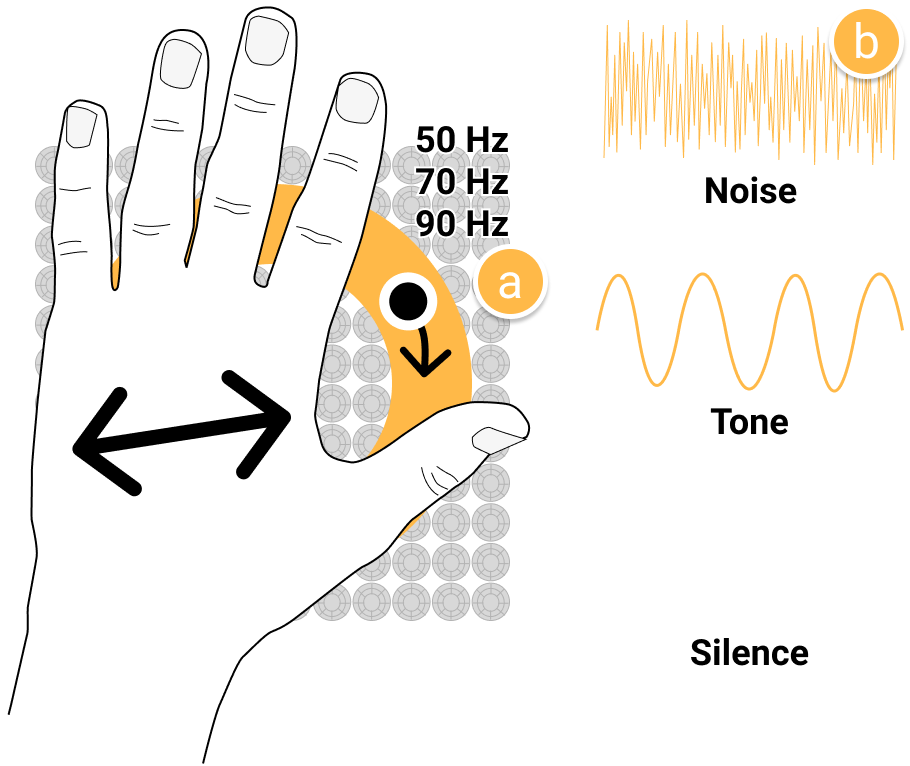

Ultrasound haptic devices can create parametric audio as well as contactless haptic feedback. In a paper at the 2021 ACM International Conference on Multimodal Interaction, I investigated whether multimodal output from these devices can influence the perception of haptic feedback. I used a magnitude estimation experiment to evaluate the perceived roughness of an ultrasound haptic pattern.

The results suggest that white noise audio from the haptics device increased perceived roughness, whereas pure tones did not, and that lower rendering frequencies may increase perceived roughness.

These results show that multimodal output has the potential to expand the range of sensations that can be presented by an ultrasound haptic device, paving the way to richer mid-air haptic interfaces.

Enhancing Ultrasound Haptics with Parametric Audio Effects

E. Freeman.

In Proceedings of 23rd ACM International Conference on Multimodal Interaction – ICMI ’21, 692-696. 2021.

@inproceedings{ICMI2021AudioHaptic,
  author = {Freeman, Euan},
  booktitle = {{Proceedings of 23rd ACM International Conference on Multimodal Interaction - ICMI '21}},
  title = {{Enhancing Ultrasound Haptics with Parametric Audio Effects}},
  year = {2021},
  publisher = {ACM},
  pages = {692--696},
  doi = {10.1145/3462244.3479951},
  url = {http://euanfreeman.co.uk/enhancing-ultrasound-haptics-with-parametric-audio-effects/},
  pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2021_AudioHaptic.pdf},
  data = {https://zenodo.org/record/5144878},
}

Acknowledgements

This research has received funding from the 🇪🇺 European Union’s Horizon 2020 research and innovation programme under grant agreement #737087. This work was completed as part of the Levitate project.