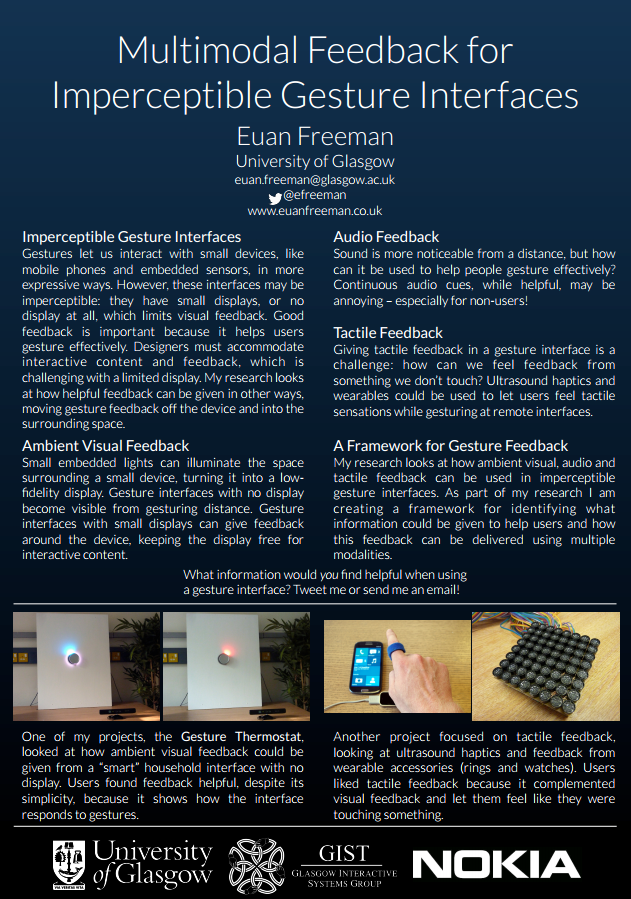

One of my biggest research interests is gesture interaction with mobile devices, also known as around-device interaction because users interact in the space around the device rather than on the device itself. In this post I’m going to give a brief overview of what around-device interaction is, how gestures can be sensed from mobile devices and how these interactions are being realised in commercial devices.

Why Use Around-Device Interaction?

Why would we want to gesture with mobile devices (such as phones or smart watches) anyway? These devices typically have small screens which we interact with in a very limited fashion; using the larger surrounding space lets us interact in more expressive ways and lets the display be utilised fully, rather than our hand occluding content as we reach out to touch the screen. Gestures also let us interact without having to first lift our device, meaning we can interact casually from a short distance. Finally, gesture input is non-contact so we can interact when we would not want to touch the screen, e.g. when preparing food and wanting to navigate a recipe but our hands are messy.

Sensing Around-Device Input

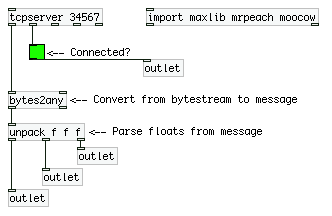

Motivated by the benefits of expressive non-contact input, HCI researchers have developed a variety of approaches for detecting around-device input. Early approaches used infrared proximity sensors, similar to the sensors used in phones to lock the display when we hold our phone to our ear. SideSight (Butler et al. 2008) placed proximity sensors around the edges of a mobile phone, letting users interact in the space beside the phone. HoverFlow (Kratz and Rohs 2009) took a similar approach, although their sensors faced upwards rather than outwards. This let users gesture above the display. Although this meant gesturing occluded the screen, users could interact in 3D space; a limitation of SideSight was that users were more or less restricted to a flat plane around the phone.
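To give a feel for how a row of proximity sensors can turn into a gesture, here is a minimal sketch of swipe detection. It is not how SideSight or HoverFlow actually process their sensor data; the function name, the normalised 0 to 1 readings and the threshold are all assumptions made for illustration. The idea is simply that a hand passing over the sensors makes each one peak at a slightly different time, and the order of those peaks gives the swipe direction.

```python
from typing import List, Optional


def swipe_direction(frames: List[List[float]], threshold: float = 0.5) -> Optional[str]:
    """Classify a swipe across a row of proximity sensors.

    frames[t][i] is the reading of sensor i at time step t. Readings are
    assumed (hypothetically) to be normalised so that 0 means nothing is
    nearby and 1 means a hand is very close.
    """
    if not frames:
        return None

    n_sensors = len(frames[0])
    peak_times = []  # (sensor index, time step of that sensor's strongest reading)
    for i in range(n_sensors):
        series = [frame[i] for frame in frames]
        peak = max(series)
        if peak >= threshold:  # ignore sensors the hand never got close to
            peak_times.append((i, series.index(peak)))

    if len(peak_times) < 2:
        return None  # not enough sensors triggered to infer a direction

    # Compare when the left-most and right-most triggered sensors peaked.
    _, first_time = peak_times[0]
    _, last_time = peak_times[-1]
    if last_time > first_time:
        return "left_to_right"
    if last_time < first_time:
        return "right_to_left"
    return None


# A hand passing over four sensors, from sensor 0 towards sensor 3.
frames = [
    [0.9, 0.1, 0.0, 0.0],
    [0.2, 0.9, 0.1, 0.0],
    [0.0, 0.2, 0.9, 0.1],
    [0.0, 0.0, 0.2, 0.9],
]
print(swipe_direction(frames))
```

A real system would also need to deal with noisy readings and with hands that hover rather than sweep, which this sketch ignores.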

Abracadabra (Harrison and Hudson 2009) used magnetic sensing to detect input around a smart watch. Users wore a magnetic ring which affected the magnetic field around the device, letting the watch determine finger position and detect gestures. This let users interact with a very small display in a much larger area, an example of what Harrison called “interacting with small devices in a big way” when he gave a presentation to our research group last year, and something today’s smart watch designers should consider. uTrack (Chen et al. 2013) built on this approach with additional wearable sensors. MagiTact (Ketabdar et al. 2010) used a similar approach to Abracadabra for detecting gestures around mobile phones.
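The magnetic approach can be illustrated with a rough sketch as well. This is not Abracadabra's algorithm: the function name, the microtesla units and the strength threshold are my own assumptions. It subtracts a baseline reading (the ambient field with no magnet nearby) from the current magnetometer reading and treats the direction of the change as a crude proxy for where the finger is around the device, which is a considerable simplification of how a magnet's field really behaves.

```python
import math
from typing import Optional, Tuple

Vector = Tuple[float, float, float]


def magnet_bearing(reading: Vector, baseline: Vector,
                   min_strength: float = 20.0) -> Optional[float]:
    """Estimate an angle (in degrees, 0 to 360) for a magnet near the device.

    reading and baseline are (x, y, z) magnetometer values, assumed here to
    be in microtesla. baseline is the ambient field with no magnet nearby.
    Returns None when the change in field is too weak to be a magnet.
    """
    dx = reading[0] - baseline[0]
    dy = reading[1] - baseline[1]
    dz = reading[2] - baseline[2]

    # Overall size of the disturbance caused by the magnet.
    strength = math.sqrt(dx * dx + dy * dy + dz * dz)
    if strength < min_strength:
        return None

    # Angle of the disturbance in the plane of the display.
    return math.degrees(math.atan2(dy, dx)) % 360.0


ambient = (10.0, -5.0, 30.0)
print(magnet_bearing((10.0, 45.0, 30.0), ambient))
```

Tracking how this angle changes over time is one simple way such a reading could be turned into input, for example treating a steadily increasing angle as a circular scrolling gesture.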

So far we’ve looked at two approaches for detecting around-device input: infrared proximity sensors and magnetic sensors. Researchers have also developed camera-based approaches. Most mobile phone cameras can be used to detect around-device gestures within the camera's field of view, which can be extended using approaches such as Surround-See (Yang et al. 2013). Surround-See placed an omni-directional lens over the camera, giving the phone a complete view of its surrounding environment. Users could then gesture from even further away (e.g. across the room) because the phone could see in every direction.

Others have proposed using depth cameras for more accurate camera-based hand tracking. I was excited when Google revealed Project Tango earlier this year because a mobile phone with a depth sensor and processing resources dedicated to computer vision is a step closer to realising this type of interaction. While mobile phones can already detect basic gestures using their magnetic sensors and cameras, depth cameras, in my opinion, would allow more expressive gestures without having to wear anything (e.g. magnetic accessories).

We’re also now seeing low-power alternative sensing approaches, such as AllSee (Kellogg et al. 2014), which can detect gestures using ambient wireless signals. These approaches could be ideal for wearables, which are constrained by small batteries. Low-power sensing could also allow always-on gesture sensing; this is currently too power-hungry with some around-device sensing approaches.

Commercial Examples

I have so far discussed a variety of sensing approaches found in research. This is by no means a comprehensive survey of around-device gesture recognition, but it shows the wide variety of approaches possible and identifies some seminal work in this area. Now I will look at some commercial examples of around-device interfaces to show that there is an interest in moving interaction away from the touch-screen and into the around-device space.

Perhaps the best-known around-device interface is the one found on the Samsung Galaxy S4. Samsung included features called Air View and Air Gesture which let users gesture above the display without having to touch it. Users could hover over images in a gallery to see a larger preview and could navigate through a photo album by swiping over the display. A limitation of the Samsung implementation was that users had to be quite close to the display for gestures to be detected – so close that they may as well have used touch input!

Nokia also included an around-device gesture in an update for some of their Lumia phones last year. Users could peek at their notifications by holding their hand over the proximity sensor briefly. While just a single gesture, this let users check their phones easily without unlocking them. With young smartphone users reportedly checking their phones more than thirty times per day (BBC Newsbeat, 4th April 2014), this is a gesture that could get a lot of use!

There are also a number of software libraries which use the front-facing camera to detect gesture input, allowing around-device interaction on typical mobile phones.

Conclusion

In this post we took a quick look at around-device interaction. This is still an active research area and one where we are seeing many interesting developments – especially as researchers are now focusing on issues other than sensing approaches. With smartphone developers showing an interest in this modality, identifying and overcoming interaction challenges is the next big step in around-device interaction research.