Introduction

My PhD research looks at improving gesture interaction with small devices, like mobile phones, using multimodal feedback. One of the first things I looked at was tactile feedback for above-device interfaces. Above-device interaction is gesture interaction over a device; for example, users can gesture at a phone on a table in front of them to dismiss unwanted interruptions, or gesture over a tablet on the kitchen counter to navigate a recipe. I look at above-device gesture interaction in more detail in my Mobile HCI ’14 poster paper [1], which also gives a quick overview of some prior work in this area.

In two studies, described in my ICMI ’14 paper [2], we looked at how above-device interfaces could give tactile feedback. Giving tactile feedback during gestures is a challenge because users don’t touch the device they are gesturing at; tactile feedback from the device itself would go unnoticed unless users were holding it while they gestured. We therefore looked at ultrasound haptics and distal tactile feedback from wearables. In our studies, users interacted with a mobile phone interface (pictured above) which used a Leap Motion to track two selection gestures.

Gestures

Our studies looked at two selection gestures: Count (above) and Point (below). These gestures came from our user-designed gesture study [1]. With Count, users select from numbered targets by extending the appropriate number of fingers. When there are more than five targets, we partition the targets into groups. Users can select from a group by moving their hand. In the image above, the palm position is closest to the bottom half of the screen, so we activate the lower group of targets. If users moved their hand towards the upper half of the screen, we would activate the upper group of four targets. Users had to hold a Count gesture for 1000 ms to make a selection.
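The Count selection logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the studies: the function name `count_target`, the normalised `palm_y` coordinate (0 at the top of the screen, 1 at the bottom) and the even split of targets into groups of at most five are all assumptions made for the example.

```python
from typing import Optional

MAX_GROUP_SIZE = 5  # assumption: at most one target per finger of one hand


def count_target(extended_fingers: int, palm_y: float, num_targets: int) -> Optional[int]:
    """Return the 0-based index of the target a Count gesture refers to.

    extended_fingers: number of fingers currently extended (1 to 5).
    palm_y: palm position along the screen's vertical axis, normalised
            to [0, 1] with 0 at the top (a hypothetical convention).
    num_targets: total number of numbered targets on screen.
    Returns None when the gesture does not match any target.
    """
    if num_targets < 1 or extended_fingers < 1:
        return None

    # Split the targets into as few groups as possible, as evenly as possible
    # (eight targets become two groups of four).
    num_groups = -(-num_targets // MAX_GROUP_SIZE)  # ceiling division
    base, extra = divmod(num_targets, num_groups)
    sizes = [base + (1 if g < extra else 0) for g in range(num_groups)]

    # The group whose screen region is nearest the palm becomes active.
    clamped = min(max(palm_y, 0.0), 1.0)
    group = min(int(clamped * num_groups), num_groups - 1)

    # Within the active group, n extended fingers select the n-th target.
    if extended_fingers > sizes[group]:
        return None
    return sum(sizes[:group]) + extended_fingers - 1
```

With eight targets, `count_target(2, 0.8, 8)` returns index 5: the palm is over the lower half of the screen, so the lower group of four is active and two fingers pick its second target.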

With Point, users controlled a cursor which was mapped to their finger position relative to the device. We used the space beside the device to avoid occluding the screen while gesturing. Users made selections by dwelling the cursor over a target for 1000 ms.
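Both gestures confirm a selection after the same target has been indicated for 1000 ms. A hedged sketch of that dwell logic is shown below; the class name `DwellSelector` and its `update` method are hypothetical, and timestamps are passed in explicitly so the example does not depend on any particular tracking API.

```python
from typing import Hashable, Optional

DWELL_MS = 1000  # dwell time reported in the text


class DwellSelector:
    """Report a selection once the same target has been held for dwell_ms."""

    def __init__(self, dwell_ms: float = DWELL_MS) -> None:
        self.dwell_ms = dwell_ms
        self._target: Optional[Hashable] = None
        self._since_ms = 0.0
        self._fired = False

    def update(self, target: Optional[Hashable], now_ms: float) -> Optional[Hashable]:
        """Feed the currently indicated target (or None) for one tracking frame.

        Returns the target on the frame where the dwell completes, and
        None on every other frame.
        """
        if target != self._target:
            # The indicated target changed, so restart the dwell timer.
            self._target = target
            self._since_ms = now_ms
            self._fired = False
            return None
        if target is None or self._fired:
            return None
        if now_ms - self._since_ms >= self.dwell_ms:
            self._fired = True  # select only once per continuous dwell
            return target
        return None
```

For Point, `target` would be whichever target lies under the cursor; for Count, it would be the target matching the current finger count. Calling `update` once per tracking frame is enough to drive either gesture.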

For a video demo of these gestures, see the video linked in the entry for [2] in the References.

Tactile Feedback

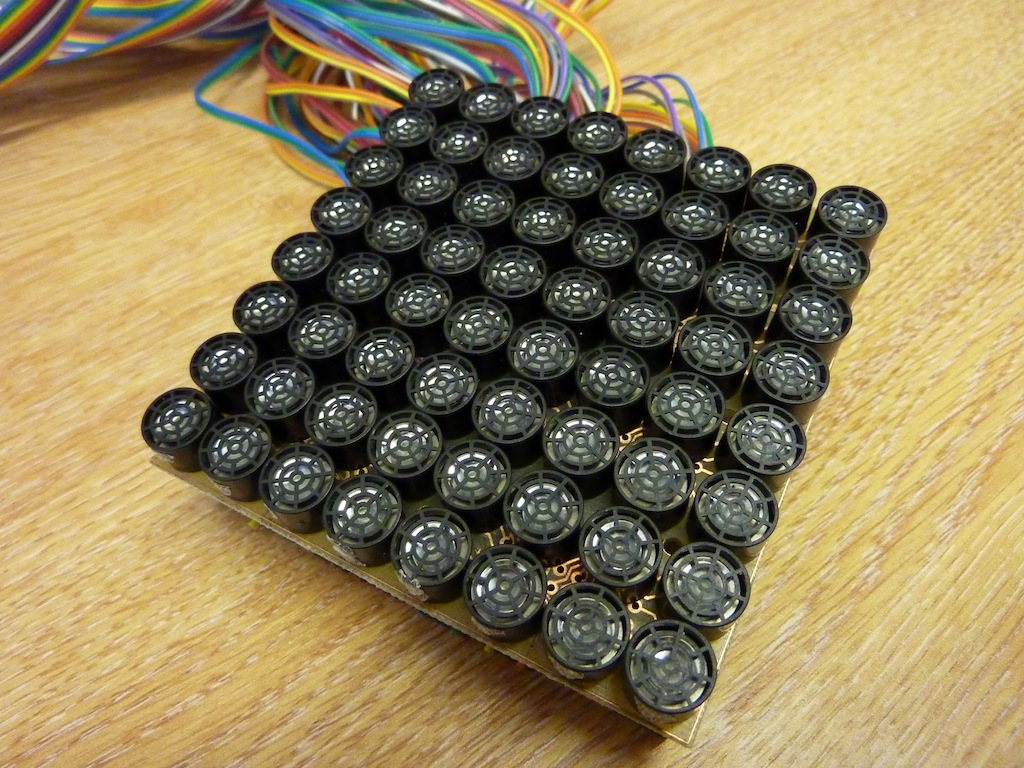

In our first study we looked at different ways of giving tactile feedback. We compared feedback directly from the device when held, ultrasound haptics (using an array of ultrasound transducers, below) and distal feedback from wearable accessories. We used two wearable tactile feedback prototypes: a “watch” and a “ring” (vibrotactile actuators affixed to a watch strap and an adjustable velcro ring). We found that all of these were effective for giving feedback, although participants had divided preferences.

Some preferred feedback directly from the phone because it was familiar. However, holding the phone is an unlikely case in above-device interaction, because an advantage of this interaction modality is that users don’t need to first lift the phone or reach out to touch it. Some participants liked feedback from our ring prototype because it was close to the point of interaction (when using Point), and others preferred feedback from the watch (pictured below) because it was a more acceptable accessory than a vibrotactile ring. An advantage of ultrasound haptics is that users do not need to wear any accessories. Participants appreciated this, although the feedback was less noticeable than vibrotactile feedback. This was partly because of the small ultrasound array used (similar in size to a mobile phone) and partly because of the nature of ultrasound haptics.

In a second study we focused on feedback given on the wrist using our watch prototype. We were interested to see how tactile feedback affected interaction using our Point and Count gestures. We compared three tactile feedback designs against visual feedback alone. Tactile feedback had no impact on performance (possibly because selection was too easy), although it did have a significant beneficial effect on workload: workload (measured using NASA-TLX) was significantly lower when dynamic tactile feedback was given. Users also preferred receiving tactile feedback to receiving none.

A more detailed qualitative analysis and the results of both studies appear in our ICMI 2014 paper [2]. A position paper [3] from the CHI 2016 workshop on mid-air haptics and displays describes this work in the broader context of research towards more usable mid-air widgets.

Tactile Feedback Source Code

A Pure Data patch for generating our tactile feedback designs is available here.

References

[1] Towards Usable and Acceptable Above-Device Interactions

E. Freeman, S. Brewster, and V. Lantz.

In Mobile HCI ’14 Posters, 459-464. 2014.

@inproceedings{MobileHCI2014Poster,
  author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},
  booktitle = {Mobile HCI '14 Posters},
  pages = {459--464},
  publisher = {ACM},
  title = {Towards Usable and Acceptable Above-Device Interactions},
  pdf = {http://research.euanfreeman.co.uk/papers/MobileHCI_2014_Poster.pdf},
  doi = {10.1145/2628363.2634215},
  year = {2014},
}

[2] Tactile Feedback for Above-Device Gesture Interfaces: Adding Touch to Touchless Interactions

E. Freeman, S. Brewster, and V. Lantz.

In Proceedings of the International Conference on Multimodal Interaction – ICMI ’14, 419-426. 2014.

@inproceedings{ICMI2014,
  author = {Freeman, Euan and Brewster, Stephen and Lantz, Vuokko},
  booktitle = {Proceedings of the International Conference on Multimodal Interaction - ICMI '14},
  pages = {419--426},
  publisher = {ACM},
  title = {{Tactile Feedback for Above-Device Gesture Interfaces: Adding Touch to Touchless Interactions}},
  pdf = {http://research.euanfreeman.co.uk/papers/ICMI_2014.pdf},
  doi = {10.1145/2663204.2663280},
  year = {2014},
  url = {http://euanfreeman.co.uk/projects/above-device-tactile-feedback/},
  video = {{https://www.youtube.com/watch?v=K1TdnNBUFoc}},
}

[3] Towards Mid-Air Haptic Widgets

E. Freeman, D. Vo, G. Wilson, G. Shakeri, and S. Brewster.

In CHI 2016 Workshop on Mid-Air Haptics and Displays: Systems for Un-instrumented Mid-Air Interactions. 2016.

@inproceedings{MidAirHapticsWorkshop,
  author = {Freeman, Euan and Vo, Dong-Bach and Wilson, Graham and Shakeri, Gozel and Brewster, Stephen},
  booktitle = {CHI 2016 Workshop on Mid-Air Haptics and Displays: Systems for Un-instrumented Mid-Air Interactions},
  title = {{Towards Mid-Air Haptic Widgets}},
  year = {2016},
  pdf = {http://research.euanfreeman.co.uk/papers/MidAirHaptics.pdf},
}